The development of transformer-based large language models (LLMs) has significantly advanced AI-driven applications, particularly conversational agents. However, these models face inherent limitations due to their fixed context windows, which can lead to loss of relevant information over time. While Retrieval-Augmented Generation (RAG) methods provide external knowledge to supplement LLMs, they often rely on static document retrieval, which lacks the flexibility required for adaptive and evolving conversations.

MemGPT was introduced as an AI memory solution that extends beyond traditional RAG approaches, yet it still struggles to maintain coherence across long-term interactions. In enterprise applications, where AI systems must integrate information from ongoing conversations and structured data sources, a more effective memory framework is needed: one that can retain information and reason over it as it changes over time.

Introducing Zep: A Memory Layer for AI Agents

Zep AI Research presents Zep, a memory layer designed to address these challenges by leveraging Graphiti, a temporally-aware knowledge graph engine. Unlike static retrieval methods, Zep continuously updates and synthesizes both unstructured conversational data and structured business information.

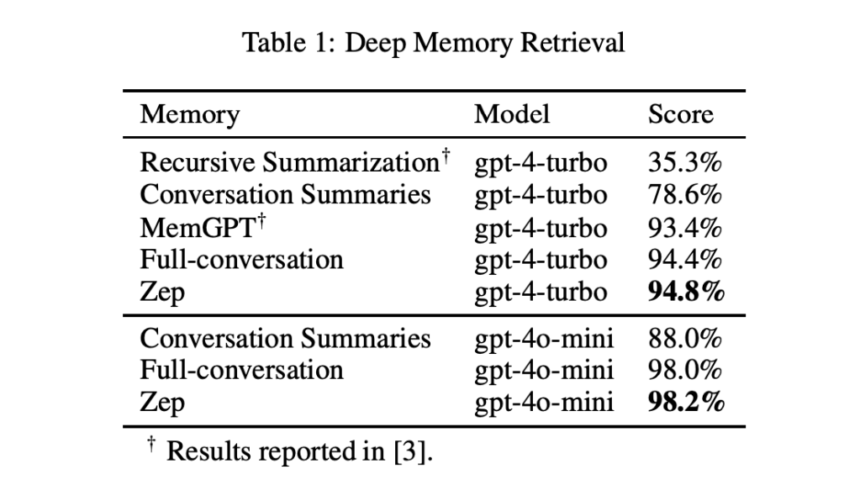

In benchmarking tests, Zep has demonstrated strong performance in the Deep Memory Retrieval (DMR) benchmark, achieving 94.8% accuracy, slightly surpassing MemGPT’s 93.4%. Additionally, it has proven effective in LongMemEval, a benchmark designed to assess AI memory in complex enterprise settings, showing accuracy improvements of up to 18.5% while reducing response latency by 90%.

Technical Design and Benefits

1. A Knowledge Graph Approach to Memory

Unlike traditional RAG methods, Zep’s Graphiti engine structures memory as a hierarchical knowledge graph with three key components:

- Episode Subgraph: Captures raw conversational data, ensuring a complete historical record.

- Semantic Entity Subgraph: Identifies and organizes entities to enhance knowledge representation.

- Community Subgraph: Groups entities into clusters, providing a broader contextual framework.
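To make the three tiers concrete, here is a minimal sketch of how episodes, entities, and communities might link to one another. It is not Graphiti's actual data model: the class names, fields, and methods (`MemoryGraph`, `add_episode`, `episodes_for_community`) are hypothetical, and entity extraction is assumed to happen elsewhere.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    """Raw conversational input, stored verbatim (episode subgraph)."""
    episode_id: str
    text: str


@dataclass
class Entity:
    """An entity mentioned in one or more episodes (semantic entity subgraph)."""
    name: str
    source_episode_ids: set[str] = field(default_factory=set)


@dataclass
class Community:
    """A cluster of related entities (community subgraph)."""
    label: str
    member_names: set[str] = field(default_factory=set)


@dataclass
class MemoryGraph:
    """Hypothetical container holding all three tiers."""
    episodes: dict[str, Episode] = field(default_factory=dict)
    entities: dict[str, Entity] = field(default_factory=dict)
    communities: dict[str, Community] = field(default_factory=dict)

    def add_episode(self, episode: Episode, entity_names: list[str]) -> None:
        """Store the raw episode and link each mentioned entity back to it."""
        self.episodes[episode.episode_id] = episode
        for name in entity_names:
            entity = self.entities.setdefault(name, Entity(name))
            entity.source_episode_ids.add(episode.episode_id)

    def add_to_community(self, label: str, entity_name: str) -> None:
        """Attach an existing entity to a community, creating the community if needed."""
        if entity_name not in self.entities:
            raise KeyError(entity_name)
        community = self.communities.setdefault(label, Community(label))
        community.member_names.add(entity_name)

    def episodes_for_community(self, label: str) -> list[str]:
        """Walk community -> entities -> episodes and return sorted episode ids."""
        ids: set[str] = set()
        for name in self.communities[label].member_names:
            ids |= self.entities[name].source_episode_ids
        return sorted(ids)


graph = MemoryGraph()
graph.add_episode(Episode("e1", "Alice moved to Berlin."), ["Alice", "Berlin"])
graph.add_episode(Episode("e2", "Alice joined Acme."), ["Alice", "Acme"])
graph.add_to_community("alice-life", "Alice")
graph.add_to_community("alice-life", "Acme")
print(graph.episodes_for_community("alice-life"))  # ['e1', 'e2']
```

Because every entity keeps a pointer to the episodes it came from, a high-level community can always be traced back to the raw conversation that supports it.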

2. Handling Time-Based Information

Zep employs a bi-temporal model to track knowledge with two distinct timelines:

- Event Timeline (T): Orders events chronologically.

- System Timeline (T’): Maintains a record of how data has been stored and updated.

This approach helps AI systems retain a meaningful understanding of past interactions while integrating new information effectively.
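The two timelines can be illustrated with a small sketch. It assumes each fact carries a validity interval on the event timeline T and a record interval on the system timeline T’; the field names (`valid_at`, `invalid_at`, `created_at`, `expired_at`) and the helper functions are illustrative assumptions rather than Zep's documented schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Fact:
    statement: str
    valid_at: datetime                      # T: when the fact became true
    created_at: datetime                    # T': when the system ingested it
    invalid_at: Optional[datetime] = None   # T: when it stopped being true
    expired_at: Optional[datetime] = None   # T': when the system superseded it


def supersede(old: Fact, new: Fact) -> None:
    """Close `old` on both timelines instead of deleting it."""
    old.invalid_at = new.valid_at
    old.expired_at = new.created_at


def facts_as_of(facts, event_time, known_by):
    """Statements true at `event_time`, judged only from what was recorded by `known_by`."""
    result = []
    for f in facts:
        if f.created_at > known_by:
            continue  # not yet ingested at that point on the system timeline
        # An invalidation only counts if the system had recorded it by `known_by`.
        invalidation_known = f.expired_at is not None and f.expired_at <= known_by
        end = f.invalid_at if invalidation_known else None
        if f.valid_at <= event_time and (end is None or event_time < end):
            result.append(f.statement)
    return result


paris = Fact("Alice lives in Paris", datetime(2023, 1, 1), datetime(2023, 1, 5))
berlin = Fact("Alice lives in Berlin", datetime(2024, 3, 1), datetime(2024, 3, 10))
supersede(paris, berlin)

facts = [paris, berlin]
# What the system believed on 6 March, before the move was ingested:
print(facts_as_of(facts, datetime(2024, 3, 5), known_by=datetime(2024, 3, 6)))
# What it believes about the same date once the update has been recorded:
print(facts_as_of(facts, datetime(2024, 3, 5), known_by=datetime(2024, 4, 1)))
```

Keeping superseded facts rather than overwriting them is what lets an agent answer both "where does Alice live now?" and "what did we think at the time?".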

3. A Multi-Faceted Retrieval Mechanism

Zep retrieves relevant information using a combination of:

- Cosine Similarity Search (for semantic matching)

- Okapi BM25 Full-Text Search (for keyword relevance)

- Graph-Based Breadth-First Search (for contextual associations)

These techniques allow AI agents to retrieve the most relevant information efficiently.
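The sketch below shows one way the three searches could be combined. Each function is a toy, pure-Python version of the named technique. The final fusion step uses reciprocal rank fusion, which is an assumption made here for illustration; the article does not say how Zep merges or reranks the three result lists. All function names and the sample data are hypothetical.

```python
import math
from collections import Counter, deque


def cosine_rank(query_vec, doc_vecs):
    """Rank document ids by cosine similarity to the query vector."""
    def cos(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0
    return sorted(doc_vecs, key=lambda d: cos(query_vec, doc_vecs[d]), reverse=True)


def bm25_rank(query, docs, k1=1.5, b=0.75):
    """Rank document ids by Okapi BM25 score; documents with no match are omitted."""
    tokens = {d: text.lower().split() for d, text in docs.items()}
    n = len(tokens)
    avg_len = sum(len(t) for t in tokens.values()) / n
    scores = {}
    for d, toks in tokens.items():
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            df = sum(1 for t in tokens.values() if term in t)
            if df == 0 or tf[term] == 0:
                continue
            idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
            length_norm = 1 - b + b * len(toks) / avg_len
            score += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * length_norm)
        if score > 0:
            scores[d] = score
    return sorted(scores, key=scores.get, reverse=True)


def bfs_rank(graph, seeds, max_hops=2):
    """Return nodes reachable from `seeds` within `max_hops`, nearest first."""
    seen = set(seeds)
    queue = deque((s, 0) for s in seeds)
    order = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append((nxt, depth + 1))
    return order


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists: each item scores sum(1 / (k + rank)) across the lists."""
    fused = Counter()
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            fused[item] += 1.0 / (k + rank)
    return [item for item, _ in fused.most_common()]


# Toy corpus: ids double as graph nodes; embeddings are made-up 2-D vectors.
docs = {"a": "alice moved to berlin", "b": "bob likes tea", "c": "berlin office opened"}
vecs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.6, 0.8]}
edges = {"a": ["c"], "c": ["b"]}

results = reciprocal_rank_fusion([
    cosine_rank([1.0, 0.0], vecs),
    bm25_rank("berlin", docs),
    bfs_rank(edges, ["a"], max_hops=1),
])
print(results)
```

The three searches fail in different ways: embeddings miss exact keywords, BM25 misses paraphrases, and neither sees facts that are only connected through the graph, so fusing the rankings covers more cases than any single method.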

4. Efficiency and Scalability

By structuring memory in a knowledge graph, Zep reduces redundant data retrieval, leading to lower token usage and faster responses. This makes it well-suited for enterprise applications where cost and latency are critical factors.

Performance Evaluation

Zep’s capabilities have been validated through comprehensive testing in two key benchmarks:

1. Deep Memory Retrieval (DMR) Benchmark

DMR measures how well AI memory systems retain and retrieve past information. Zep achieved:

- 94.8% accuracy with GPT-4 Turbo, compared to 93.4% for MemGPT.

- 98.2% accuracy with GPT-4o Mini, demonstrating strong memory retention.

2. LongMemEval Benchmark

LongMemEval assesses AI agents in real-world business scenarios, where conversations can span over 115,000 tokens. Zep demonstrated:

- 15.2% and 18.5% accuracy improvements with GPT-4o Mini and GPT-4o, respectively.

- Significant latency reduction, cutting response latency by about 90% compared with passing the full conversation in context.

- Lower token usage, requiring only 1.6k tokens per response compared to 115k tokens in full-context approaches.
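As a quick check on what the token figures imply, the short calculation below converts the two reported context sizes into a percentage reduction and a compression ratio. The helper name `token_reduction` is made up for this example, and the inputs are the rounded 1.6k and 115k values quoted above.

```python
def token_reduction(full_context_tokens: int, retrieved_tokens: int) -> tuple[float, float]:
    """Return (percent reduction, compression ratio) for a retrieved context."""
    reduction = 100 * (1 - retrieved_tokens / full_context_tokens)
    ratio = full_context_tokens / retrieved_tokens
    return reduction, ratio


reduction, ratio = token_reduction(115_000, 1_600)
print(f"{reduction:.1f}% fewer tokens, about {ratio:.0f}x smaller")
# 98.6% fewer tokens, about 72x smaller
```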

3. Performance Across Different Question Types

Zep showed strong performance in complex reasoning tasks:

- Preference-Based Questions: 184% improvement over full-context retrieval.

- Multi-Session Queries: 30.7% improvement.

- Temporal Reasoning: 38.4% improvement, highlighting Zep’s ability to track and infer time-sensitive information.

Conclusion

Zep provides a structured and efficient way for AI systems to retain and retrieve knowledge over extended periods. By moving beyond static retrieval methods and incorporating a dynamically evolving knowledge graph, it enables AI agents to maintain coherence across sessions and reason over past interactions.

With 94.8% DMR accuracy and LongMemEval accuracy gains of up to 18.5%, Zep represents an advance in AI memory solutions. By optimizing data retrieval, reducing token costs, and improving response speed, it offers a practical and scalable approach to enhancing AI-driven applications.


The post Zep AI Introduces a Smarter Memory Layer for AI Agents Outperforming the MemGPT in the Deep Memory Retrieval (DMR) Benchmark appeared first on MarkTechPost.