Large language models (LLMs) are the foundation of multi-agent systems, in which multiple AI agents collaborate, communicate, and solve problems together. The agents use LLMs to understand tasks, generate responses, and make decisions, mimicking human teamwork. However, these systems are often inefficient because they rely on fixed designs that stay the same for every task. A simple query receives the same heavyweight pipeline as a complex one, which wastes computation and slows responses. This makes it difficult to balance accuracy, speed, and cost across diverse tasks.

Existing multi-agent methods such as CAMEL, AutoGen, MetaGPT, DSPy, EvoPrompting, GPTSwarm, and EvoAgent focus on optimizing specific aspects of a system, such as prompt tuning, agent profiling, and communication. However, these methods struggle with adaptability. Manually designed systems follow a predefined structure that does not adjust to the task at hand, while automated approaches search for a single best configuration and cannot readjust it dynamically for each query. As a result, both simple and complex queries are handled inefficiently, which raises computational cost and lowers overall performance on real-world problems.

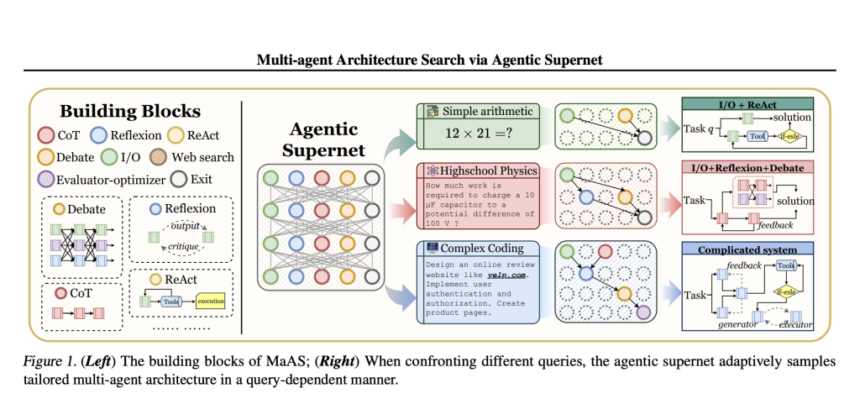

To address the limitations of existing multi-agent systems, researchers proposed MaAS (Multi-agent Architecture Search). The framework uses a probabilistic agentic supernet to generate query-dependent multi-agent architectures. Instead of selecting one fixed optimal system, MaAS dynamically samples a customized multi-agent system for each query, balancing performance against computational cost. The search space is defined by agentic operators, which are LLM-based workflows involving multiple agents, tools, and prompts. The supernet learns a distribution over possible agentic architectures and optimizes it for task utility under cost constraints. A controller network samples architectures conditioned on the query, using a Mixture-of-Experts (MoE)-style mechanism for efficient selection. The distribution is optimized with a cost-aware empirical Bayes Monte Carlo procedure, while the agentic operators themselves are updated with textual gradient-based methods. Together, these components automate the evolution of multi-agent systems, providing efficiency and adaptability across diverse and complex queries.
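To make the idea of query-dependent architecture selection more concrete, here is a minimal Python sketch. It is not the authors' implementation. The operator names, their costs, the hash-based `embed` function, the cumulative-probability threshold, and the `early_exit` operator are all assumptions chosen for illustration, and the selection step is deterministic rather than truly sampled. A real system would use a learned controller and a real text encoder.

```python
import hashlib
import math

# Hypothetical operators with made-up relative costs (illustrative only).
OPERATORS = {
    "early_exit": 0.0,
    "io": 1.0,
    "chain_of_thought": 2.0,
    "self_refine": 4.0,
    "debate": 8.0,
}


def embed(text, dim=8):
    """Toy deterministic embedding in [-1, 1]; a stand-in for a real encoder."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [(b / 127.5) - 1.0 for b in digest[:dim]]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_operators(query, layer, threshold=0.6):
    """MoE-style selection for one layer: take the highest-probability
    operators until their cumulative probability reaches `threshold`."""
    q = embed(query)
    names = list(OPERATORS)
    # Score each operator by a dot product with the query embedding.
    scores = [
        sum(a * b for a, b in zip(q, embed(f"{name}|layer{layer}")))
        for name in names
    ]
    ranked = sorted(zip(names, softmax(scores)), key=lambda p: p[1], reverse=True)
    chosen, cumulative = [], 0.0
    for name, prob in ranked:
        chosen.append(name)
        cumulative += prob
        if cumulative >= threshold:
            break
    return chosen


def build_architecture(query, max_layers=4, threshold=0.6):
    """Stack layers of operators; stop as soon as `early_exit` is selected."""
    architecture = []
    for layer in range(max_layers):
        ops = select_operators(query, layer, threshold)
        if "early_exit" in ops:
            break
        architecture.append(ops)
    return architecture


def architecture_cost(architecture):
    """Total illustrative cost of all operators in the architecture."""
    return sum(OPERATORS[name] for layer in architecture for name in layer)


if __name__ == "__main__":
    for query in ["What is 2 + 3?", "Prove that sqrt(2) is irrational."]:
        arch = build_architecture(query)
        print(query, "->", arch, "cost:", architecture_cost(arch))
```

In this sketch, different queries can yield architectures of different depth and cost, which is the property the supernet is meant to provide. In MaAS the scoring function is learned so that cheaper architectures are preferred whenever they achieve comparable utility. Here the scores come from a fixed hash and carry no such meaning.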

Researchers evaluated MaAS on six public benchmarks spanning math reasoning (GSM8K, MATH, MultiArith), code generation (HumanEval, MBPP), and tool use (GAIA), comparing it with 14 baselines that include single-agent methods, handcrafted multi-agent systems, and automated approaches. MaAS consistently outperformed all baselines, achieving the best average score of 83.59% across tasks and an improvement of 18.38% on GAIA Level 1 tasks. Cost analysis showed that MaAS is also resource-efficient, requiring the fewest training tokens, the lowest API costs, and the shortest wall-clock time. Case studies highlighted its ability to adapt multi-agent workflows dynamically to each query.

In summary, MaAS addresses the rigidity of traditional multi-agent systems with an agentic supernet that adapts to each query. This improves performance, uses resources more efficiently, and makes the system more flexible and scalable. In future work, MaAS may be extended into a more general framework for automation and self-organization. Further directions include better sampling strategies, improved domain adaptability, and the incorporation of real-world constraints to boost collective intelligence.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper Introduces MaAS (Multi-agent Architecture Search): A New Machine Learning Framework that Optimizes Multi-Agent Systems appeared first on MarkTechPost.
