Large language models (LLMs) developed for math, programming, and general autonomous agents need stronger reasoning at test time. Existing approaches either prompt the model to produce explicit reasoning steps or use sampling to collect reasoning traces and then train the model to reproduce them. Reinforcement learning (RL) offers more room for self-exploration and learning from feedback, but its impact on complex reasoning has so far been limited. Scaling LLMs at test time also remains an open problem, because spending more compute at inference does not necessarily produce better results. Deeper reasoning and longer responses can potentially improve performance, but achieving this effectively has proven difficult.

Current methods for improving language model reasoning focus on imitation learning, in which models replicate reasoning steps generated through prompting or rejection sampling. Pretraining on reasoning-related data and fine-tuning with reinforcement learning improve understanding, but they do not scale well to complex reasoning. Post-training techniques such as generating question-answer pairs and adding verifiers improve accuracy but rely heavily on external supervision. Scaling language models with more data and larger models improves performance, but scaling through reinforcement learning and at inference time has remained largely ineffective. Repeated sampling raises computational cost without improving the underlying reasoning ability, which makes current techniques inefficient for deeper reasoning and long-form responses.
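To make the rejection-sampling step concrete, here is a minimal sketch of how imitation data is often collected: sample several candidate solutions per prompt and keep only those whose final answer matches the reference. The `generate` and `extract_answer` callables, the exact-match check, and the toy "model" are hypothetical stand-ins, not any particular library's API or the method used in the paper.

```python
import itertools
from typing import Callable, List

def rejection_sample(
    generate: Callable[[str], str],        # hypothetical: returns one sampled solution
    extract_answer: Callable[[str], str],  # hypothetical: pulls the final answer out of a solution
    prompt: str,
    reference_answer: str,
    num_samples: int = 8,
) -> List[str]:
    """Keep only sampled solutions whose final answer matches the reference."""
    accepted = []
    for _ in range(num_samples):
        candidate = generate(prompt)
        if extract_answer(candidate) == reference_answer:
            accepted.append(candidate)
    return accepted

# Toy usage: a stand-in "model" that cycles through two canned solutions.
canned = itertools.cycle(["... so the answer is 4", "... so the answer is 5"])
toy_generate = lambda prompt: next(canned)
toy_extract = lambda text: text.rsplit(" ", 1)[-1]  # last word is the answer
kept = rejection_sample(toy_generate, toy_extract, "What is 2 + 2?", "4", num_samples=4)
print(len(kept))  # 2
```

Only the accepted traces become training targets, which is why this style of data tends to omit the failed attempts and self-corrections that T1 deliberately keeps.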

To address these problems, researchers from Tsinghua University and Zhipu AI proposed T1, a method that scales reinforcement learning by widening the scope of exploration and improving inference scaling. T1 first trains the language model on chain-of-thought data that includes trial-and-error and self-verification, steps that existing methods typically exclude during training. As a result, the model learns not only to reach correct answers but also the steps taken to arrive at them. Unlike previous approaches that focus only on getting the right solution, T1 encourages diverse reasoning paths by sampling multiple responses to each prompt and analyzing errors before reinforcement learning. The framework strengthens RL training in two ways: oversampling increases response diversity, and an entropy-based auxiliary loss regulates training stability. Rather than keeping the reference model fixed, T1 updates it dynamically with an exponential moving average so that training does not become rigid. T1 also assigns a negative reward to redundant, overly long, or low-quality answers, keeping the model focused on meaningful reasoning.
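Three of the ingredients described above can be illustrated in a few lines: a reward that turns negative for overlong or repetitive answers, an entropy bonus added to the policy-gradient loss, and an exponential-moving-average (EMA) update of the reference model. This is a minimal sketch under stated assumptions, not T1's implementation: the reward values (1, 0, -1), `entropy_coef`, `decay`, and the idea that repetition is detected by the caller are all hypothetical, and parameters are plain floats in a dict rather than real model weights.

```python
import math
from typing import Dict, List

def shaped_reward(is_correct: bool, num_tokens: int, max_tokens: int,
                  is_repetitive: bool) -> float:
    """Hypothetical reward: negative for overlong or repetitive answers,
    otherwise 1.0 if correct and 0.0 if not."""
    if num_tokens > max_tokens or is_repetitive:
        return -1.0  # penalty value is an illustrative assumption
    return 1.0 if is_correct else 0.0

def token_entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def loss_with_entropy_bonus(pg_loss: float, step_probs: List[List[float]],
                            entropy_coef: float = 0.01) -> float:
    """Policy-gradient loss minus a scaled mean token entropy (an entropy bonus),
    which discourages the policy from collapsing onto a few outputs."""
    mean_entropy = sum(token_entropy(p) for p in step_probs) / len(step_probs)
    return pg_loss - entropy_coef * mean_entropy

def ema_update(reference: Dict[str, float], policy: Dict[str, float],
               decay: float = 0.99) -> Dict[str, float]:
    """Move each reference parameter a small step toward the current policy."""
    return {name: decay * reference[name] + (1.0 - decay) * policy[name]
            for name in reference}

print(shaped_reward(True, 500, 4096, False))        # 1.0
print(shaped_reward(True, 5000, 4096, False))       # -1.0
print(round(token_entropy([0.5, 0.5]), 4))          # 0.6931
print(round(ema_update({"w": 0.0}, {"w": 1.0})["w"], 4))  # 0.01
```

With `decay` close to 1, the reference model trails the policy slowly, so a KL-style regularizer measured against it still constrains training without pinning the policy to its starting point.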

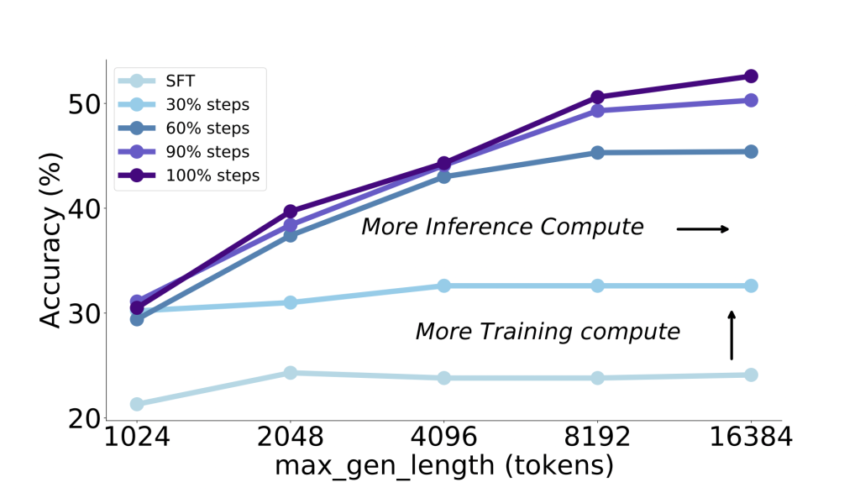

The researchers built T1 on open models such as GLM-4-9B and Qwen2.5-14B/32B, focusing on mathematical reasoning through reinforcement learning (RL). They derived training data from MATH-train and NuminaMath, curating 30,000 instances by extracting answers and filtering out noisy data. Supervised fine-tuning (SFT) used a cosine-decay learning-rate schedule, and RL training used policy-gradient optimization with rewards based on answer correctness. In evaluation, T1 outperformed its baseline models on math benchmarks, with the Qwen2.5-32B version improving 10-20% over its SFT counterpart. Increasing the number of sampled responses per prompt (K) improved exploration and generalization, especially on GPQA. A sampling temperature of 1.2 kept training stable, while temperatures that were too high or too low degraded performance. Penalties applied during RL training controlled response length and improved consistency. The results also showed significant gains from inference scaling, with more inference compute leading to better results.
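Two of the training knobs mentioned above, the cosine-decay schedule and the sampling temperature used to draw K responses, follow standard textbook formulas. The sketch below shows generic versions of both; it is not the paper's code, and `peak_lr`, `min_lr`, the toy logit vector, and the seeded `random.Random` sampler are illustrative assumptions (a real model samples token by token over its full vocabulary).

```python
import math
import random
from typing import List

def cosine_decay_lr(step: int, total_steps: int, peak_lr: float,
                    min_lr: float = 0.0) -> float:
    """Cosine decay from peak_lr at step 0 down to min_lr at total_steps."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Temperature > 1 flattens the distribution; temperature < 1 sharpens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_k(logits: List[float], k: int, temperature: float,
             rng: random.Random) -> List[int]:
    """Draw k independent samples (indices) from the tempered distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=k)

print(cosine_decay_lr(0, 100, 1e-5))    # 1e-05
print(cosine_decay_lr(100, 100, 1e-5))  # 0.0

logits = [2.0, 1.0, 0.0]
# Higher temperature puts less mass on the top choice, which diversifies the K samples.
print(softmax_with_temperature(logits, 1.2)[0] < softmax_with_temperature(logits, 1.0)[0])  # True
print(len(sample_k(logits, k=16, temperature=1.2, rng=random.Random(0))))  # 16
```

Raising the temperature is one way oversampling yields more varied responses, which matches the article's note that values that are too low or too high both hurt training.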

In conclusion, T1 enhances large language models by scaling reinforcement learning with broader exploration and more stable training. Penalties and oversampling help smooth out the influence of bottleneck samples. The method shows strong performance and promising scaling behavior, and the researchers' measurements of inference scaling indicate that further RL training improves both reasoning accuracy and inference-scaling trends. T1 surpasses state-of-the-art models on challenging benchmarks, addressing weaknesses in current reasoning approaches. This work offers a starting point for further research and a framework for advancing reasoning capabilities and scaling large language models.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post This AI Paper from the Tsinghua University Propose T1 to Scale Reinforcement Learning by Encouraging Exploration and Understand Inference Scaling appeared first on MarkTechPost.