LLMs have demonstrated strong reasoning capabilities in domains such as mathematics and coding, with models like ChatGPT, Claude, and Gemini gaining widespread attention. The release of GPT -4 has further intensified interest in enhancing reasoning abilities through improved inference techniques. A key challenge in this area is enabling LLMs to detect and correct errors in their outputs—a process known as self-correction. While models can refine responses using external ground-truth reward signals, this approach introduces computational overhead, requiring running multiple models during inference. Studies have shown that accuracy can still improve even when reward feedback is derived from proxy models. However, without external guidance, current LLMs struggle to self-correct based solely on intrinsic reasoning. Recent efforts explore using LLMs as evaluators, where models generate reward signals through instruction-following mechanisms rather than pre-trained reward functions.

Related research on self-rewarding alignment has investigated methods for integrating response generation and evaluation within a single LLM. Iterative fine-tuning approaches enable models to label their outputs, providing learning signals that drive self-improvement. Self-correction studies have demonstrated that while teacher-assisted training enhances reflection in conversational tasks, intrinsic self-correction for reasoning remains unreliable without additional supervision. Most prior work depends on external reward models to determine when corrections should be made, leading to increased inference costs. Rule-based reinforcement learning has also been explored as an alternative, with recent advancements showing that certain pre-trained models naturally exhibit self-correction behaviors. However, replicating these results across different architectures remains challenging, as performance improvements are often linked to proprietary training data and specialized model design.

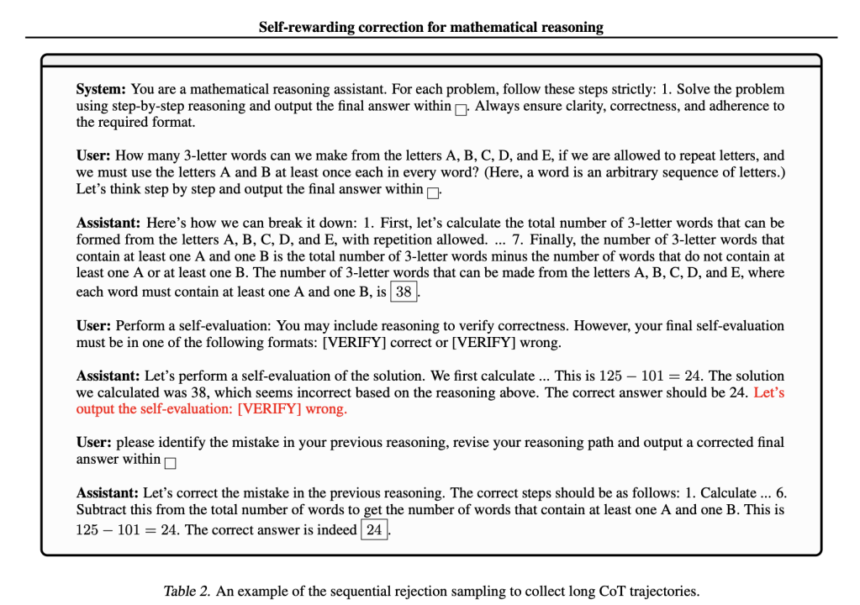

Researchers from the University of Illinois Urbana-Champaign and the University of Maryland, College Park, explore self-rewarding reasoning in LLMs, enabling them to generate reasoning steps, evaluate their correctness, and refine responses without external feedback. Their two-stage framework first uses sequential rejection sampling to construct long chain-of-thought (CoT) trajectories that embed self-rewarding and self-correction behaviors. Fine-tuning on this data helps models learn these patterns, which are further improved using reinforcement learning with rule-based signals. Experiments with Llama-3 and Qwen-2.5 show that this approach enhances self-correction and matches the performance of models relying on external rewards.

Self-rewarding reasoning in language models is framed as a multi-turn Markov Decision Process (MDP). The model generates an initial response and evaluates its answer. If deemed correct, it stops; otherwise, it refines the response iteratively. This approach follows a two-stage training framework: self-rewarding instruction fine-tuning (IFT) and RL. The IFT stage involves sequential rejection sampling to collect reasoning trajectories, while RL optimizes correctness assessment using KL-regularized training. Unlike traditional RLHF, this method employs oracle rewards to prevent reward hacking. Experiments demonstrate its effectiveness in improving mathematical reasoning accuracy through structured self-correction and verification processes.

The study evaluates mathematical reasoning models using datasets like MATH500, OlympiadBench, and Minerva Math, assessing performance through metrics such as initial and final accuracy, self-correction improvements, and reward model accuracy. Baseline methods like STaR/RAFT and intrinsic self-correction show limited effectiveness, often leading to unnecessary modifications and accuracy drops. In contrast, self-rewarding reasoning models consistently enhance accuracy and correction efficiency while minimizing incorrect changes. Fine-tuning on self-generated corrections significantly improves the model’s ability to refine errors without overcorrection. This approach outperforms traditional methods by integrating self-rewarding signals, leading to more reliable mathematical reasoning capabilities.

In conclusion, the study introduces a self-rewarding reasoning framework for LLMs, improving self-correction and computational efficiency. By integrating self-rewarding IFT and reinforcement learning, the model detects and refines errors using past attempts and internal reward signals. Experiments with Llama-3 and Qwen-2.5 show superior performance over intrinsic self-correction. Future improvements include addressing reward model accuracy issues, enhancing reinforcement learning in later training stages, and exploring multi-turn RL methods. A two-stage approach—sequential rejection sampling for reasoning patterns and reinforcement learning with rule-based signals—enables step-by-step correction without external feedback, offering a scalable, efficient solution for mathematical reasoning.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 80k+ ML SubReddit.

The post Self-Rewarding Reasoning in LLMs: Enhancing Autonomous Error Detection and Correction for Mathematical Reasoning appeared first on MarkTechPost.