Neuroprosthetic devices have significantly advanced brain-computer interfaces (BCIs), enabling communication for individuals with speech or motor impairments caused by conditions such as anarthria, ALS, or severe paralysis. These devices decode patterns of neural activity recorded by electrodes implanted in motor regions of the brain, allowing users to produce complete sentences. Early BCIs could recognize only basic linguistic elements, but recent AI-driven decoders have reached near-natural speech production speeds. Despite these advances, invasive neuroprostheses require neurosurgical implantation, which carries risks such as brain hemorrhage and infection and poses long-term maintenance challenges. Consequently, they remain difficult to scale for widespread use, particularly for non-responsive patient populations.

Non-invasive BCIs, which primarily rely on scalp EEG, offer a safer alternative but suffer from poor signal quality, so users must perform cognitively demanding tasks for decoding to work. Even with optimized methods, EEG-based BCIs remain limited in accuracy, which restricts their practical usability. Magnetoencephalography (MEG) offers a potential solution because it provides a higher signal-to-noise ratio than EEG. Recent AI models trained on MEG signals recorded during language comprehension tasks have shown notable improvements in decoding accuracy. These findings suggest that combining high-resolution MEG recordings with advanced AI models could enable reliable, non-invasive BCIs for language production.

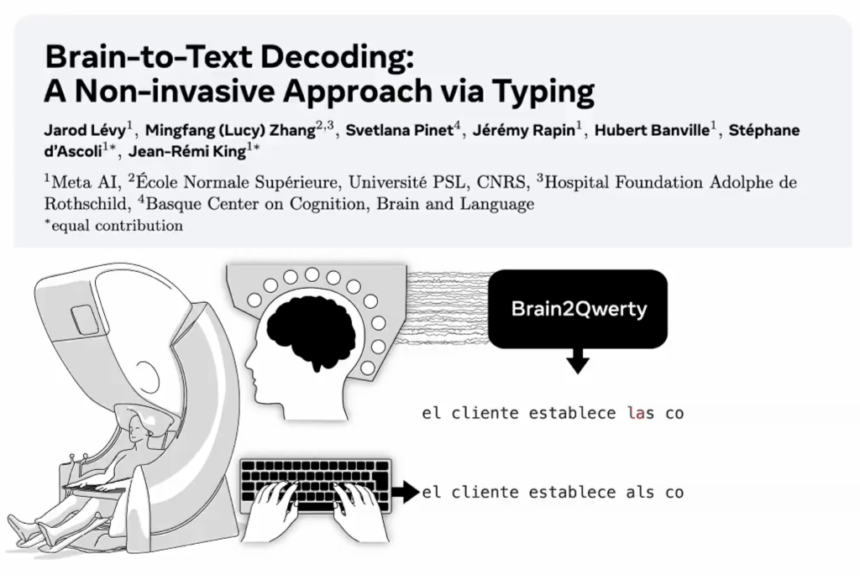

Researchers from Meta AI, École Normale Supérieure (Université PSL, CNRS), Hospital Foundation Adolphe de Rothschild, Basque Center on Cognition, Brain and Language, and Ikerbasque (Basque Foundation for Science) have developed Brain2Qwerty, a deep learning model that decodes text production from non-invasive recordings of brain activity. In the study, 35 participants typed memorized sentences while their neural activity was recorded with EEG or MEG. Trained on these signals, Brain2Qwerty achieved a character error rate (CER) of 32% with MEG, substantially better than its 67% CER with EEG. These results narrow the gap between invasive and non-invasive BCIs and point to potential applications for patients who cannot communicate.
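The character error rate is conventionally defined as the Levenshtein (edit) distance between the decoded text and the reference text, divided by the number of reference characters. The sketch below illustrates that standard definition in plain Python, assuming the article's CER follows this convention; the function names and the example sentence are hypothetical and are not taken from the study's code.

```python
def levenshtein(ref, hyp):
    """Minimum number of substitutions, insertions and deletions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (free when characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance normalized by the length of the reference text."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Hypothetical decoded output with three substituted characters.
    print(character_error_rate("hola mundo", "hila nunfo"))  # 0.3
```

Under this definition, a CER of 32% corresponds to roughly 32 character edits per 100 reference characters, versus about 67 for the EEG result. Because insertions are counted, CER can exceed 100% when the decoder produces many spurious characters.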

The study explores decoding language production from non-invasive EEG and MEG recordings acquired while participants typed sentences. Thirty-five right-handed native Spanish speakers typed sentences they had memorized, yielding nearly 18 hours of EEG and 22 hours of MEG recordings. They typed on a custom keyboard designed not to introduce recording artifacts. The Brain2Qwerty model combines convolutional and transformer modules to predict keystrokes from neural signals, and its predictions are then refined by a character-level language model. Preprocessing included filtering, segmentation, and scaling, and the model was trained with a cross-entropy loss and the AdamW optimizer. Performance was measured with the character error rate (CER) and, for comparison with traditional BCI benchmarks, the hand error rate (HER).
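The hand error rate is not defined in the paragraph above. A minimal sketch, assuming HER is the fraction of keystrokes for which the decoded key is typed with the wrong hand, using the common touch-typing split of a QWERTY letter block (with "ñ" placed as on Spanish keyboards). The key-to-hand table, the one-prediction-per-keystroke alignment, and all names here are assumptions for illustration; the study's exact definition may differ.

```python
# Hypothetical left/right assignment following the usual touch-typing
# convention on a QWERTY letter block ("ñ" sits right of "l" on Spanish layouts).
LEFT_KEYS = set("qwertasdfgzxcvb")
RIGHT_KEYS = set("yuiophjklñnm")


def hand_of(char):
    """Return "L" or "R" for letter keys, None for space, digits, punctuation."""
    c = char.lower()
    if c in LEFT_KEYS:
        return "L"
    if c in RIGHT_KEYS:
        return "R"
    return None


def hand_error_rate(true_keys, predicted_keys):
    """Fraction of aligned keystrokes whose predicted hand differs from the true hand.

    Assumes exactly one prediction per keystroke and skips keystrokes whose
    true character has no hand assignment in this sketch. A prediction with no
    hand assignment counts as an error.
    """
    if len(true_keys) != len(predicted_keys):
        raise ValueError("expected one prediction per keystroke")
    errors = counted = 0
    for t, p in zip(true_keys, predicted_keys):
        true_hand = hand_of(t)
        if true_hand is None:
            continue
        counted += 1
        if hand_of(p) != true_hand:
            errors += 1
    return errors / counted if counted else 0.0


if __name__ == "__main__":
    print(hand_error_rate("hola mundo", "hila nunfo"))  # 0.0
    print(hand_error_rate("gato", "hato"))              # 0.25
```

The first example shows why HER is more lenient than CER: "hila nunfo" contains three wrong characters, yet every error stays on the correct hand, so its HER is zero.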

To verify that the typing protocol elicited the expected brain responses, the researchers analyzed differences in neural activity between left- and right-hand key presses. MEG outperformed EEG both in classifying which hand pressed a key and in decoding individual characters, with peak accuracies of 74% and 22%, respectively. The Brain2Qwerty model substantially improved decoding performance over baseline methods, and ablation studies confirmed that its convolutional, transformer, and language model components each contribute to this gain. Further analysis showed that frequent words and characters were decoded more accurately and that decoding errors were related to the keyboard layout. Together, these findings highlight Brain2Qwerty's effectiveness at decoding characters from neural signals.
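One simple way to check whether decoding errors follow the keyboard layout is to compare the physical distance between intended and decoded keys with the distance expected by chance. The sketch below is hypothetical and is not the study's analysis: it places letters on a simplified QWERTY grid (ignoring row stagger, with "ñ" after "l"), averages the distance over aligned substitution errors, and compares it with the mean distance between two random distinct keys.

```python
import math
from itertools import product
from statistics import mean

# Hypothetical (row, column) coordinates for a simplified QWERTY letter block.
# Row stagger is ignored; "ñ" follows "l" as on Spanish layouts.
ROWS = ["qwertyuiop", "asdfghjklñ", "zxcvbnm"]
KEY_POS = {ch: (r, c) for r, row in enumerate(ROWS) for c, ch in enumerate(row)}


def key_distance(a, b):
    """Euclidean distance between two letter keys on the simplified grid."""
    (r1, c1), (r2, c2) = KEY_POS[a], KEY_POS[b]
    return math.hypot(r1 - r2, c1 - c2)


def mean_error_distance(true_keys, predicted_keys):
    """Mean key distance over aligned substitutions between letter keys."""
    dists = [
        key_distance(t, p)
        for t, p in zip(true_keys, predicted_keys)
        if t != p and t in KEY_POS and p in KEY_POS
    ]
    return mean(dists) if dists else 0.0


def chance_distance():
    """Mean distance between two distinct letter keys drawn uniformly at random."""
    return mean(key_distance(a, b) for a, b in product(KEY_POS, repeat=2) if a != b)


if __name__ == "__main__":
    observed = mean_error_distance("hola mundo", "hila nunfo")
    print(observed, round(chance_distance(), 2))
```

In the example, every error ("o" to "i", "m" to "n", "d" to "f") lands on a neighboring key, giving a mean distance of 1.0, below the chance level. A real analysis would aggregate over many trials and test the difference statistically.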

In conclusion, the study introduces Brain2Qwerty, a method for decoding sentence production from non-invasive MEG recordings. Its average CER of 32% substantially outperforms EEG-based approaches. Unlike prior studies of language perception, this work targets production, combining a deep learning decoder with a pretrained character-level language model. Although it advances non-invasive BCIs, challenges remain: decoding does not yet run in real time, the approach has yet to be adapted for locked-in individuals, and MEG systems are not wearable. Future work should enable real-time processing, explore imagination-based tasks, and integrate more advanced MEG sensors, paving the way for better brain-computer interfaces for people with communication impairments.


The post Meta AI Introduces Brain2Qwerty: Advancing Non-Invasive Sentence Decoding with MEG and Deep Learning appeared first on MarkTechPost.