Brain-computer interfaces (BCIs) have made significant progress in recent years, offering communication solutions for individuals with speech or motor impairments. However, the most effective BCIs rely on invasive methods, such as implanted electrodes, which carry medical risks such as infection and require long-term maintenance. Non-invasive alternatives, particularly those based on electroencephalography (EEG), have been explored, but they suffer from low accuracy due to poor signal resolution. A key challenge in this field is making non-invasive methods reliable enough for practical use. Meta AI’s research into Brain2Qwerty is a step toward addressing this challenge.

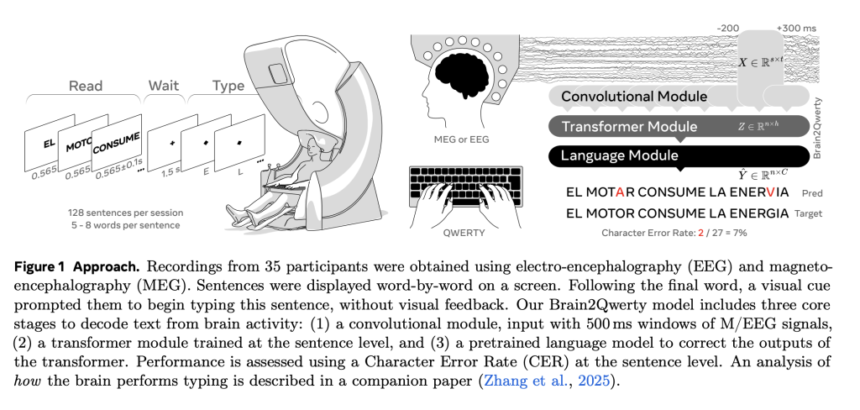

Meta AI introduces Brain2Qwerty, a neural network designed to decode sentences from brain activity recorded using EEG or magnetoencephalography (MEG). Participants in the study typed memorized sentences on a QWERTY keyboard while their brain activity was recorded. Unlike previous approaches that required users to focus on external stimuli or imagined movements, Brain2Qwerty leverages natural motor processes associated with typing, offering a potentially more intuitive way to interpret brain activity.

Model Architecture and Its Potential Benefits

Brain2Qwerty is a three-stage neural network designed to process brain signals and infer typed text. The architecture consists of:

- Convolutional Module: Extracts temporal and spatial features from EEG/MEG signals.

- Transformer Module: Processes sequences to refine representations and improve contextual understanding.

- Language Model Module: A pretrained character-level language model corrects and refines predictions.
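The article does not give Brain2Qwerty’s layer sizes, kernel shapes, or training details, so the following is only a minimal pure-Python sketch of how data could flow through the first two stages. It assumes a toy recording of 3 sensors by 8 samples, random untrained weights, a ReLU after the convolution, and a single attention head with identity query/key/value projections. The function names `temporal_conv` and `self_attention` are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # list of rows


def temporal_conv(signal: Matrix, kernels: Matrix) -> Matrix:
    """Hypothetical stage-1 sketch: a stride-1, unpadded 1-D convolution.

    signal is [channels][time]. Each kernel is a flat list of
    channels * width weights, ordered channel by channel, so it covers
    every sensor (spatial) over a short window of samples (temporal).
    Returns [time_out][n_kernels] feature vectors after a ReLU.
    """
    n_ch, n_t = len(signal), len(signal[0])
    width = len(kernels[0]) // n_ch
    features = []
    for t in range(n_t - width + 1):
        # Flatten the window in the same channel-major order as the kernels.
        window = [signal[c][t + w] for c in range(n_ch) for w in range(width)]
        features.append(
            [max(0.0, sum(k * x for k, x in zip(kern, window))) for kern in kernels]
        )
    return features


def self_attention(x: Matrix) -> Matrix:
    """Hypothetical stage-2 sketch: single-head scaled dot-product
    self-attention with identity Q/K/V projections and a residual add."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        top = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        mixed = [sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)]
        out.append([a + b for a, b in zip(q, mixed)])
    return out


# Toy, untrained example: 3 sensors x 8 samples, 4 kernels of width 3.
random.seed(0)
signal = [[random.gauss(0.0, 1.0) for _ in range(8)] for _ in range(3)]
kernels = [[random.gauss(0.0, 1.0) for _ in range(3 * 3)] for _ in range(4)]
features = temporal_conv(signal, kernels)  # 6 time steps x 4 features
context = self_attention(features)         # same shape, now context-mixed
print(len(context), len(context[0]))       # prints: 6 4
```

Each kernel spans every sensor and a short window of samples, so the convolution yields one feature vector per window position; the attention step keeps that shape but lets every time step mix in information from every other one.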

By integrating these three components, Brain2Qwerty decodes typed text more accurately than previous models, producing fewer errors in brain-to-text translation.
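The article only says that a pretrained character-level language model corrects the decoder’s predictions; it does not say how the two are combined. One common way to combine a per-keystroke classifier with a language model is shallow fusion: add weighted language-model log-probabilities to the decoder’s log-probabilities and search for the best-scoring string. The sketch below does this with a Viterbi search over a toy character bigram model. The probabilities, the `lm_weight` parameter, the `^` start symbol, and the function name are all hypothetical, and Brain2Qwerty’s actual correction mechanism may differ.

```python
import math
from typing import Dict, List

FLOOR = 1e-6  # hypothetical stand-in probability for unseen bigrams


def lm_fused_decode(
    emissions: List[Dict[str, float]],
    bigram: Dict[str, Dict[str, float]],
    lm_weight: float = 1.0,
) -> str:
    """Viterbi search for the string maximizing
    sum_t log P_dec(c_t) + lm_weight * sum_t log P_lm(c_t | c_{t-1}).

    emissions[t] maps candidate characters to the decoder's probability for
    keystroke t; bigram[prev][c] is P_lm(c | prev), with "^" as sentence start.
    """
    def lm(prev: str, c: str) -> float:
        return lm_weight * math.log(bigram.get(prev, {}).get(c, FLOOR))

    # best[c] = (score, text) of the highest-scoring path ending in character c
    best = {c: (math.log(p) + lm("^", c), c) for c, p in emissions[0].items()}
    for step in emissions[1:]:
        new_best = {}
        for c, p in step.items():
            # Extend every surviving path by c and keep the best extension.
            new_best[c] = max(
                ((score + math.log(p) + lm(prev, c), text + c)
                 for prev, (score, text) in best.items()),
                key=lambda pair: pair[0],
            )
        best = new_best
    return max(best.values(), key=lambda pair: pair[0])[1]


# Toy decoder output for three keystrokes: the third is ambiguous between
# "w" and the neighboring QWERTY key "e".
emissions = [
    {"t": 0.9, "r": 0.1},
    {"h": 0.8, "g": 0.2},
    {"w": 0.6, "e": 0.4},
]
# Toy character bigram model ("^" marks the start of the sentence).
bigram = {
    "^": {"t": 0.3, "r": 0.1},
    "t": {"h": 0.4, "g": 0.01},
    "r": {"h": 0.01, "g": 0.01},
    "h": {"e": 0.5, "w": 0.01},
    "g": {"e": 0.2, "w": 0.01},
}
print(lm_fused_decode(emissions, bigram, lm_weight=0.0))  # prints: thw
print(lm_fused_decode(emissions, bigram))                 # prints: the
```

With `lm_weight=0.0` the search reduces to picking each keystroke’s most probable character, which yields "thw"; with the language model switched on, the strong prior on "h" followed by "e" outweighs the decoder’s mild preference for "w".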

Evaluating Performance and Key Findings

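The study measured Brain2Qwerty’s effectiveness using Character Error Rate (CER), the number of character substitutions, deletions, and insertions needed to turn the decoded text into the target sentence, divided by the sentence’s length (lower is better):

- EEG-based decoding resulted in a 67% CER, indicating a high error rate.

- MEG-based decoding performed significantly better, with a 32% CER.

- The most accurate participants achieved a 19% CER, demonstrating the model’s potential under optimal conditions.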
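CER divides the character-level edit distance (substitutions S, deletions D, insertions I) between the decoded text and the reference sentence by the number of reference characters N, so CER = (S + D + I) / N. A 32% CER therefore means roughly 32 edits per 100 reference characters, and because insertions count, CER can exceed 100%. The minimal sketch below computes it with the standard Levenshtein dynamic program; the function names and the example sentence are illustrative, not taken from the study, whose exact text normalization is not described here.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of substitutions, deletions, and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from "" to each prefix of hyp
    for i, r in enumerate(ref, start=1):
        curr = [i]  # deleting all i characters of this ref prefix
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete r
                curr[j - 1] + 1,         # insert h
                prev[j - 1] + (r != h),  # substitute (free when r == h)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = (substitutions + deletions + insertions) / len(reference)."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


# One substitution ("i" -> "a") and one deletion ("o") over 19 reference characters.
cer = character_error_rate("the quick brown fox", "the quack brwn fox")
print(f"{cer:.3f}")  # prints: 0.105
```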

These results highlight the limitations of EEG for accurate text decoding while showing MEG’s potential for non-invasive brain-to-text applications. The study also found that Brain2Qwerty could correct typographical errors made by participants, suggesting that it captures both motor and cognitive patterns associated with typing.

Considerations and Future Directions

Brain2Qwerty represents progress in non-invasive BCIs, yet several challenges remain:

- Real-time implementation: The model currently processes complete sentences rather than individual keystrokes in real time.

- Accessibility of MEG technology: While MEG outperforms EEG, it requires specialized equipment that is not yet portable or widely available.

- Applicability to individuals with impairments: The study was conducted with healthy participants. Further research is needed to determine how well it generalizes to those with motor or speech disorders.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Meta AI Introduces Brain2Qwerty: A New Deep Learning Model for Decoding Sentences from Brain Activity with EEG or MEG while Participants Typed Briefly Memorized Sentences on a QWERTY Keyboard appeared first on MarkTechPost.

