Artificial intelligence models face a fundamental challenge in efficiently scaling their reasoning capabilities at test time. Increasing model size often improves performance, but it also demands significant computational resources and extensive training data, which makes it impractical for many applications. Chain-of-Thought (CoT) reasoning, the most common test-time alternative, relies on explicit verbalization of intermediate steps, so it is constrained by context length and often needs task-specific training. Researchers have therefore been exploring approaches that let a model reason through internal computation rather than by producing additional tokens.

Huginn-3.5B: A New Approach to Latent Reasoning

Researchers from ELLIS Institute Tübingen, Max Planck Institute for Intelligent Systems, Tübingen AI Center, University of Maryland, College Park, and Lawrence Livermore National Laboratory have introduced Huginn-3.5B, a model designed to rethink test-time computation. Huginn-3.5B uses a recurrent-depth approach: during inference it iterates over its latent space, refining its hidden state step by step instead of generating more tokens. The number of iterations can be varied, so the model can spend additional computation on complex queries while staying efficient on simpler ones.

Key Features and Benefits

Huginn-3.5B’s core innovation lies in its depth-recurrent transformer architecture, which incorporates a looped processing unit. This mechanism enables the model to:

- Enhance reasoning dynamically: Huginn-3.5B adjusts its computational effort based on task complexity, iterating through latent space as needed.

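- Reduce reliance on long context windows: Because reasoning happens in the latent space rather than in generated tokens, intermediate steps do not consume context, which lowers memory requirements compared with long verbalized reasoning chains.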

- Function without specialized training data: Unlike Chain-of-Thought methods, Huginn-3.5B does not require explicit reasoning demonstrations to generalize effectively.

- Adapt compute per token: The model optimizes efficiency by determining how much computation each token requires.

- Facilitate efficient decoding: Huginn-3.5B refines its hidden state before generating output tokens, leading to improved coherence and reduced latency.
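
The looped processing unit described above can be pictured with a toy sketch. It is an illustration only, not Huginn-3.5B's actual implementation: the `prelude`, `recurrent_block`, and `coda` functions, the random initial state, and the tanh update are all assumptions chosen to keep the example self-contained in plain Python. What it shows is the core idea: the same block is applied repeatedly to a hidden state, so more iterations mean more computation without producing more tokens.

```python
import math
import random


def prelude(token_ids, dim):
    """Hypothetical embedding step: map each token id to a fixed vector."""
    return [[math.sin(t * (j + 1)) for j in range(dim)] for t in token_ids]


def recurrent_block(state, embedded):
    """One latent iteration (toy stand-in for the shared transformer layers).

    The same function, with the same "weights", is reused at every step.
    """
    return [
        [math.tanh(0.5 * s + 0.5 * e) for s, e in zip(s_row, e_row)]
        for s_row, e_row in zip(state, embedded)
    ]


def coda(state):
    """Hypothetical readout: one score per input position."""
    return [sum(row) / len(row) for row in state]


def forward(token_ids, num_iterations, dim=4, seed=0):
    """Run the loop `num_iterations` times; the output length never changes."""
    rng = random.Random(seed)
    embedded = prelude(token_ids, dim)
    # Assumed initialization: a random latent state, one vector per position.
    state = [[rng.gauss(0, 1) for _ in range(dim)] for _ in token_ids]
    for _ in range(num_iterations):
        state = recurrent_block(state, embedded)
    return coda(state)


if __name__ == "__main__":
    for depth in (1, 4, 32):
        print(depth, forward([3, 1, 4], num_iterations=depth))
```

In this toy, `num_iterations` is the only knob for test-time compute: the input, the parameters, and the number of outputs stay fixed while the hidden state is refined further.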

Performance Insights

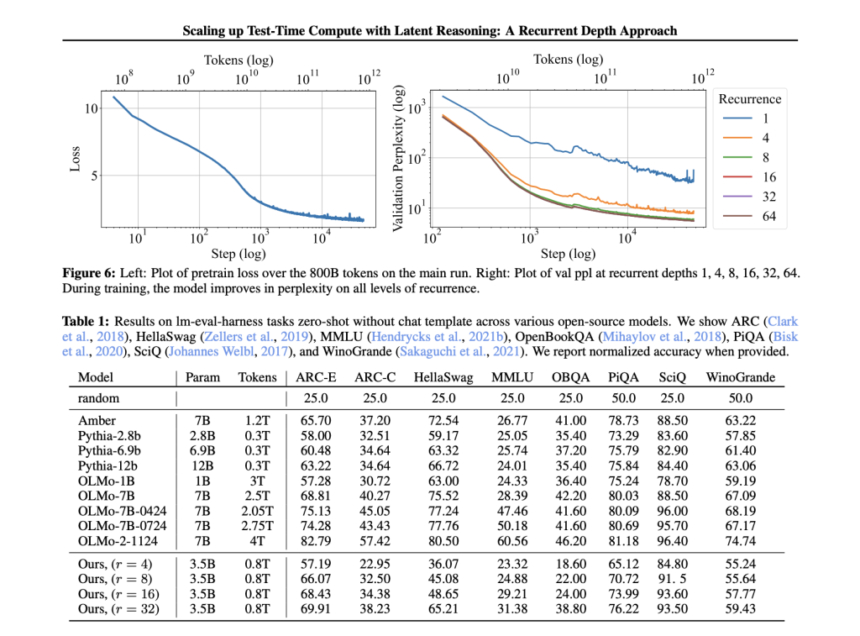

Trained on 800 billion tokens spanning general text, code, and mathematical reasoning, Huginn-3.5B was evaluated across various benchmarks. The findings include:

- Improved accuracy with increased computation: By iterating further in its latent space, Huginn-3.5B achieved performance levels comparable to much larger models.

- Competitiveness against larger models: Huginn-3.5B outperformed Pythia-6.9B and Pythia-12B, both of which have more parameters, on reasoning benchmarks such as ARC and GSM8K.

- Task-dependent compute scaling: The model allocated additional resources to complex tasks like GSM8K while processing simpler tasks like OpenBookQA efficiently.
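
One way to picture task-dependent compute is a stopping rule on the latent state. The sketch below is an assumption for illustration: the article does not say how the model decides when to stop iterating, so the convergence test, the tolerance `tol`, the cap `max_steps`, and the toy update in `latent_step` are all hypothetical. Each token is refined until successive states barely change, and the number of steps used is recorded per token.

```python
import math


def latent_step(state, token_input):
    """Toy latent update (stand-in for the shared recurrent block)."""
    return [math.tanh(0.5 * s + 0.5 * x) for s, x in zip(state, token_input)]


def refine_token(token_input, tol=1e-4, max_steps=64):
    """Iterate until the state changes by less than `tol`, or the cap is hit.

    `token_input` must be a non-empty list of floats.
    Returns the final state and the number of steps actually used.
    """
    state = [0.0] * len(token_input)
    for steps_used in range(1, max_steps + 1):
        new_state = latent_step(state, token_input)
        change = max(abs(n - o) for n, o in zip(new_state, state))
        state = new_state
        if change < tol:
            break  # assumed exit criterion: the latent state has settled
    return state, steps_used


def steps_per_token(token_inputs, tol=1e-4, max_steps=64):
    """Report how many iterations each token consumed."""
    return [refine_token(t, tol, max_steps)[1] for t in token_inputs]


if __name__ == "__main__":
    tokens = [[0.0, 0.0], [0.1, 0.3], [2.0, -1.5]]
    print(steps_per_token(tokens))
```

Under this rule the iteration count varies from token to token, and tightening `tol` trades extra computation for a more settled state. It mirrors the behaviour reported above only in spirit.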

Conclusion: The Role of Latent Reasoning in AI

Huginn-3.5B offers an alternative perspective on AI reasoning by shifting from explicit token-based processing to computation within the latent space. This enables more efficient and adaptable test-time computation without requiring a larger model. As AI continues to evolve, recurrent-depth reasoning may provide a promising direction that complements existing scaling strategies. Future research may refine the approach further and integrate it with mixture-of-experts models and fine-tuning techniques to improve flexibility and performance.

The post Meet Huginn-3.5B: A New AI Reasoning Model with Scalable Latent Computation appeared first on MarkTechPost.
