In this tutorial, we’ll walk through how to set up and perform fine-tuning on the Llama 3.2 3B Instruct model using a specially curated Python code dataset. By the end of this guide, you’ll have a better understanding of how to customize large language models for code-related tasks and practical insight into the tools and configurations needed to leverage Unsloth for fine-tuning.

Installing Required Dependencies

!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

!pip install "git+https://github.com/huggingface/transformers.git"

!pip install -U trl

!pip install --no-deps trl peft accelerate bitsandbytes

!pip install torch torchvision torchaudio triton

!pip install xformers

!python -m xformers.info

!python -m bitsandbytes

These commands install and update all the necessary libraries, such as Unsloth, Transformers, and xFormers, needed for fine-tuning the Llama 3.2 3B Instruct model on Python code. Finally, we run diagnostic commands to verify the successful installation of xFormers and bitsandbytes.
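As a complement to the diagnostic commands, a quick check from Python can confirm that each package is visible to the current interpreter. The sketch below uses only the standard library; the helper name `check_packages` and the list of package names are illustrative choices, not part of Unsloth. It only confirms that a package can be located, not that it imports cleanly or has GPU support.

```python
from importlib.util import find_spec


def check_packages(names):
    """Return {package_name: bool} telling whether each package can be located."""
    return {name: find_spec(name) is not None for name in names}


# Top-level import names of the packages installed above (illustrative list)
REQUIRED = ["unsloth", "transformers", "trl", "peft", "accelerate",
            "bitsandbytes", "torch", "xformers"]

if __name__ == "__main__":
    for name, found in check_packages(REQUIRED).items():
        print(f"{name:15s} {'OK' if found else 'MISSING'}")
```

If any entry shows MISSING, restarting the Colab runtime after installation and re-running the cell is usually the first thing to try.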

Essential Imports

from unsloth import FastLanguageModel

from trl import SFTTrainer

from transformers import TrainingArguments

import torch

from datasets import load_dataset

We import the classes and functions from Unsloth, TRL, and Transformers that we need for training and fine-tuning. We also import Hugging Face’s `load_dataset`, which we use in the next step to load the Python code dataset.

Loading the Python Code Dataset

max_seq_length = 2048

dataset = load_dataset("user/Llama-3.2-Python-Alpaca-143k", split="train")  # Save the dataset to your own Hugging Face profile, then replace "user" with your username

We set the maximum sequence length to 2048 tokens and load a custom Python code dataset from Hugging Face. Make sure the dataset is stored under your own username so that the repository ID resolves.
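The trainer configured later reads a single column (`dataset_text_field = "text"`). If your copy of the dataset stores separate fields instead, they need to be merged first. The sketch below assumes Alpaca-style columns named `instruction`, `input`, and `output` and a generic prompt template. Both are assumptions, so inspect `dataset.column_names` before relying on it.

```python
# Hypothetical Alpaca-style template; adjust it to match your dataset and model.
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)


def to_text(example, eos_token=""):
    """Merge one Alpaca-style record into a single training string."""
    text = PROMPT_TEMPLATE.format(
        instruction=example["instruction"],
        input=example.get("input", ""),
        output=example["output"],
    )
    # Appending the tokenizer's EOS token marks where a response should stop.
    return {"text": text + eos_token}


# Made-up record used only to show the resulting format
sample = {
    "instruction": "Write a function that adds two numbers.",
    "input": "",
    "output": "def add(a, b):\n    return a + b",
}
print(to_text(sample)["text"])
```

Once the tokenizer is loaded, the same function could be applied to the whole dataset with `dataset.map(lambda ex: to_text(ex, tokenizer.eos_token))`.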

Initializing the Llama 3.2 3B Model

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Llama-3.2-3B-Instruct-bnb-4bit",
    max_seq_length = max_seq_length,
    dtype = None,
    load_in_4bit = True
)

We load the Llama 3.2 3B Instruct model in 4-bit format using the Unsloth library, which reduces memory usage. To handle longer text inputs, we also set the maximum sequence length to 2048.
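To see why 4-bit loading matters, here is a back-of-the-envelope estimate of the memory needed for the weights alone at different precisions. It is a rough sketch: the parameter count is the nominal "3B" figure, and the estimate ignores quantization metadata, layers kept in higher precision, activations, and optimizer state.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 3e9  # nominal "3B"; an assumption, the exact count is somewhat higher

for label, bits in [("fp16/bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:10s} ~{weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions the weights shrink from roughly 6 GB at 16-bit precision to about 1.5 GB at 4-bit, which is what makes training feasible on a single Colab GPU.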

Configuring LoRA with Unsloth

model = FastLanguageModel.get_peft_model(
    model,
    r = 16,
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 16,
    lora_dropout = 0, # Supports any, but = 0 is optimized
    bias = "none", # Supports any, but = "none" is optimized
    # [NEW] "unsloth" uses 30% less VRAM, fits 2x larger batch sizes!
    use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context
    random_state = 3407,
    use_rslora = False, # We support rank stabilized LoRA
    loftq_config = None, # And LoftQ
    max_seq_length = max_seq_length
)

We apply LoRA (Low-Rank Adaptation) to the 4-bit model, specifying the rank (r), the scaling factor (lora_alpha), and the dropout rate. Setting use_gradient_checkpointing = "unsloth" reduces memory usage and allows training with longer context lengths. Additional options such as use_rslora (rank-stabilized LoRA) and loftq_config (LoftQ) are available for more advanced fine-tuning but are disabled here for simplicity. Finally, we set the maximum sequence length to match our earlier configuration.
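To make the effect of the rank concrete, the sketch below counts the parameters LoRA adds for the target modules listed above. Each adapted linear layer gains two small matrices, A (r × in) and B (out × r), so it adds r × (in + out) parameters. The layer dimensions are assumptions based on the published Llama 3.2 3B configuration and should be verified against the model's config.json.

```python
def lora_params(in_features, out_features, r):
    """LoRA adds A (r x in) and B (out x r) to each adapted linear layer."""
    return r * (in_features + out_features)


# Assumed Llama 3.2 3B dimensions (verify against the model's config.json)
HIDDEN, INTERMEDIATE, LAYERS = 3072, 8192, 28
KV_DIM = 8 * 128  # 8 key/value heads x head_dim 128 (grouped-query attention)

# (in_features, out_features) for each target module in one decoder layer
SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def total_lora_params(r=16):
    """Sum the LoRA parameters over all target modules and decoder layers."""
    per_layer = sum(lora_params(i, o, r) for i, o in SHAPES.values())
    return per_layer * LAYERS


r, lora_alpha = 16, 16
print(f"trainable LoRA parameters: {total_lora_params(r):,}")
# With use_rslora = False, the low-rank update is scaled by lora_alpha / r
print(f"LoRA scaling factor: {lora_alpha / r}")
```

Under these assumptions, r = 16 yields about 24 million trainable parameters, well under 1% of the base model, and lora_alpha = 16 gives a scaling factor of 1.0.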

Mounting Google Drive

from google.colab import drive

drive.mount("/content/drive")

We import the Google Colab drive module to enable access to Google Drive from within the Colab environment.

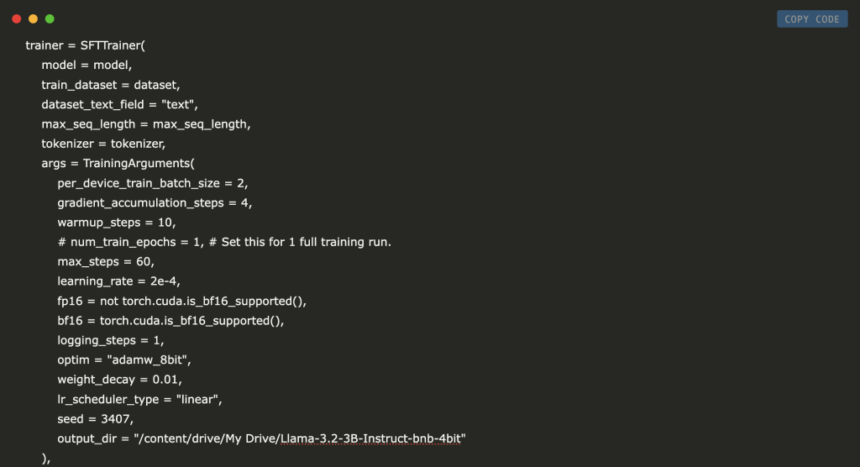

Setting Up and Running the Training Loop

trainer = SFTTrainer(
    model = model,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = max_seq_length,
    tokenizer = tokenizer,
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 10,
        # num_train_epochs = 1, # Set this for 1 full training run.
        max_steps = 60,
        learning_rate = 2e-4,
        fp16 = not torch.cuda.is_bf16_supported(),
        bf16 = torch.cuda.is_bf16_supported(),
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        output_dir = "/content/drive/My Drive/Llama-3.2-3B-Instruct-bnb-4bit"
    ),
)

trainer.train()

We create an instance of SFTTrainer with our loaded model, tokenizer, and Python code dataset, specifying the text field for training. The TrainingArguments define key hyperparameters such as batch size, learning rate, maximum training steps, and hardware-specific settings like fp16 or bf16. In this example, we set the output directory to Google Drive to conveniently store checkpoints and logs. Finally, we invoke the trainer.train() method to begin the fine-tuning process.
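It helps to work out what these hyperparameters imply before launching a run. The sketch below computes the effective batch size and traces the learning rate over the 60 steps. The schedule function is a hand-written approximation of a linear schedule with warmup (ramp up from zero, then decay linearly to zero), written for illustration rather than taken from the trainer itself, and it assumes a single GPU.

```python
def effective_batch_size(per_device, grad_accum, n_devices=1):
    """Number of examples contributing to each optimizer step."""
    return per_device * grad_accum * n_devices


def linear_lr(step, base_lr=2e-4, warmup_steps=10, max_steps=60):
    """Linear warmup from 0 to base_lr, then linear decay to 0 at max_steps."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    return base_lr * max(0.0, (max_steps - step) / (max_steps - warmup_steps))


batch = effective_batch_size(per_device=2, grad_accum=4)
print(f"examples per optimizer step: {batch}")
print(f"examples seen in 60 steps:   {batch * 60}")
for step in (0, 5, 10, 35, 60):
    print(f"step {step:2d}: lr = {linear_lr(step):.2e}")
```

With these settings the run touches only a few hundred examples, so it is best read as a quick demonstration. Switching to the commented-out `num_train_epochs = 1` instead of `max_steps` would make a full pass over the dataset.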

Saving the Fine-Tuned Model

model.save_pretrained("lora_model") # Local saving

tokenizer.save_pretrained("lora_model")

We save the LoRA-trained model and its tokenizer to a local folder named lora_model. This allows you to load and use the fine-tuned model later without repeating the training process.
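For completeness, here is one way the saved folder might be loaded again for inference. This is a sketch rather than a tested recipe: it assumes a CUDA GPU, that Unsloth is installed, and that the model's built-in chat template suits the prompts used during fine-tuning. The function name `generate_with_adapter` and its defaults are illustrative.

```python
def generate_with_adapter(prompt, adapter_dir="lora_model",
                          max_seq_length=2048, max_new_tokens=128):
    """Load the saved LoRA adapter and generate a completion (needs a CUDA GPU)."""
    # Imported lazily so the function can be defined without Unsloth installed
    from unsloth import FastLanguageModel

    model, tokenizer = FastLanguageModel.from_pretrained(
        model_name=adapter_dir,  # folder written by save_pretrained
        max_seq_length=max_seq_length,
        dtype=None,
        load_in_4bit=True,
    )
    FastLanguageModel.for_inference(model)  # switch Unsloth to inference mode

    # Assumption: the Instruct chat template matches your fine-tuning format
    messages = [{"role": "user", "content": prompt}]
    inputs = tokenizer.apply_chat_template(
        messages,
        tokenize=True,
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to("cuda")

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt
    prompt_length = inputs["input_ids"].shape[1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

A call such as `print(generate_with_adapter("Write a Python function that reverses a string."))` would then return the model's answer as plain text.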

In this tutorial, we demonstrated how to fine-tune the Llama 3.2 3B Instruct model on a Python code dataset using the Unsloth library, LoRA, and 4-bit quantization. With the code above, you can adapt a compact, memory-efficient model to generating and understanding Python code. Along the way, we used Unsloth for optimized memory usage, LoRA for parameter-efficient adaptation, and Hugging Face tools for dataset handling and training. The same setup can be reused to build language models tailored to other code-related tasks while keeping resource requirements low.


The post Fine-Tuning Llama 3.2 3B Instruct for Python Code: A Comprehensive Guide with Unsloth appeared first on MarkTechPost.