The task of training deep neural networks, especially those with billions of parameters, is inherently resource-intensive. One persistent issue is the mismatch between computation and communication phases. In conventional settings, forward and backward passes are executed sequentially, resulting in intervals where GPUs remain idle while data is exchanged or synchronized. These idle periods, or pipeline bubbles, not only extend training times but also increase memory demands. Moreover, the management of micro-batches can lead to unnecessary duplication of parameters, further straining the available resources. Finding a method to better align these phases is essential for improving efficiency and reducing training costs.

DeepSeek AI Releases DualPipe, a bidirectional pipeline parallelism algorithm for computation-communication overlap in V3/R1 training. Rather than adhering to a strict sequential order, DualPipe orchestrates forward and backward passes to occur in overlapping, bidirectional streams. This scheduling strategy is designed to harmonize the computation and communication phases so that while one set of micro-batches is engaged in forward processing, another is simultaneously undergoing backward computation.

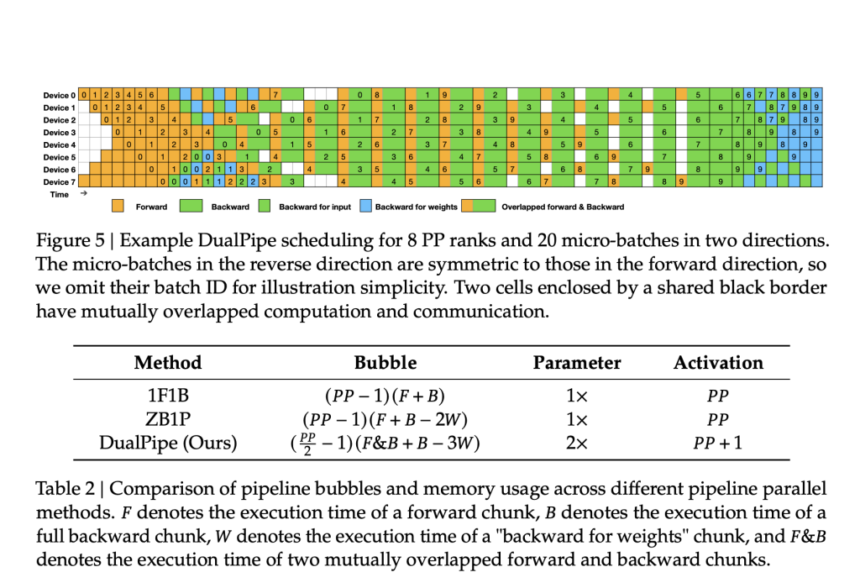

According to the DeepSeek-V3 Technical Report, this bidirectional design helps to reduce the traditional pipeline bubbles while optimizing memory usage. The system employs a symmetrical arrangement of micro-batches in both forward and reverse directions, allowing for a more consistent flow of data between GPUs. This alignment means that the hardware is in use more consistently, potentially leading to smoother and more efficient training cycles.

Technical Insights and Benefits

DualPipe achieves its efficiency by dividing the training process into a series of smaller micro-batches that are scheduled concurrently in both directions. The algorithm’s key innovation lies in its bidirectional scheduling mechanism. Unlike traditional methods—such as the simple one-forward, one-backward (1F1B) sequence or staggered variations like ZB1P—DualPipe minimizes idle time by allowing overlapping operations.

The GitHub documentation details a comparative approach:

- 1F1B: Executes forward and backward passes sequentially.

- ZB1P: Introduces a degree of staggering to mitigate idle time.

- DualPipe: Uses a dual-direction scheduling method, which is denoted in the documentation as “PP/2-1 (&+-3)”, indicating that the approach requires fewer pipeline stages while still accommodating an additional activation phase.

This nuanced method not only reduces idle periods but also offers a more balanced use of memory. Implemented with PyTorch 2.0 and above, DualPipe is compatible with current deep learning frameworks and is designed to integrate smoothly into existing training pipelines.

Observations and Comparative Data

The repository provides a clear example of how DualPipe schedules operations for a system with eight pipeline parallel ranks and twenty micro-batches. In this arrangement, micro-batches in the reverse direction mirror those in the forward direction, effectively reducing the usual delays observed in conventional pipelines. The schedule diagram, which highlights overlapping cells with a shared border, serves as a visual representation of how the communication and computation phases are interwoven.

Furthermore, the repository offers a comparative analysis of memory usage. Whereas methods like 1F1B and ZB1P require specific pipeline configurations, DualPipe’s approach—with a configuration denoted as “2× PP+1”—appears to use resources more judiciously. This efficient use of hardware can be especially beneficial in large-scale training environments, where even modest improvements can lead to significant time and cost savings.

Conclusion

DualPipe offers a thoughtful and well-engineered solution to one of the long-standing challenges in deep learning training. By overlapping the forward and backward passes and carefully coordinating communication with computation, the algorithm reduces idle time and optimizes resource utilization. This approach not only has the potential to shorten training times but also to lower the overall cost of deploying large models.

Check out the GitHub Repo. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 80k+ ML SubReddit.

The post DeepSeek AI Releases DualPipe: A Bidirectional Pipeline Parallelism Algorithm for Computation-Communication Overlap in V3/R1 Training appeared first on MarkTechPost.