Cerebras Systems, an AI hardware startup, announced on Tuesday that it will add six new AI data centers in North America and Europe, raising its inference capacity to more than 40 million tokens per second. The expansion positions the company to compete more aggressively against Nvidia in the artificial intelligence market.

The new facilities will be established in Dallas, Minneapolis, Oklahoma City, Montreal, New York, and France, with 85% of the total capacity based in the United States. According to James Wang, director of product marketing at Cerebras, the company’s aim this year is to meet the expected surge in demand for inference tokens driven by new AI models like Llama 4 and DeepSeek.

Inference capacity will rise from 2 million to more than 40 million tokens per second by Q4 2025 across the eight planned data centers. Wang said the expansion is a critical part of the company’s push to deliver high-speed AI inference services, a market traditionally dominated by Nvidia’s GPU-based solutions.

Partnerships with Hugging Face and AlphaSense

In addition to the infrastructure expansion, Cerebras announced partnerships with Hugging Face, a prominent AI developer platform, and AlphaSense, a market intelligence platform. The integration with Hugging Face will enable its five million developers to access Cerebras Inference seamlessly, facilitating the usage of open-source models like Llama 3.3 70B.

Wang described Hugging Face as the “GitHub of AI,” noting that the integration allows developers to activate Cerebras services with a single click. The partnership with AlphaSense marks a significant transition for the financial intelligence platform, which is moving away from a leading AI model vendor and will instead use Cerebras to power its AI-driven market intelligence search, reportedly making processing ten times faster.

Technological edge in AI inference

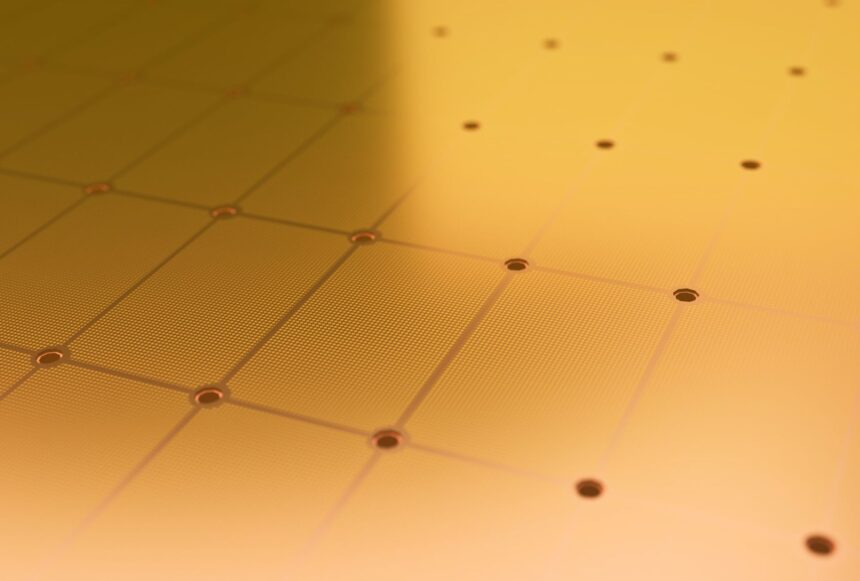

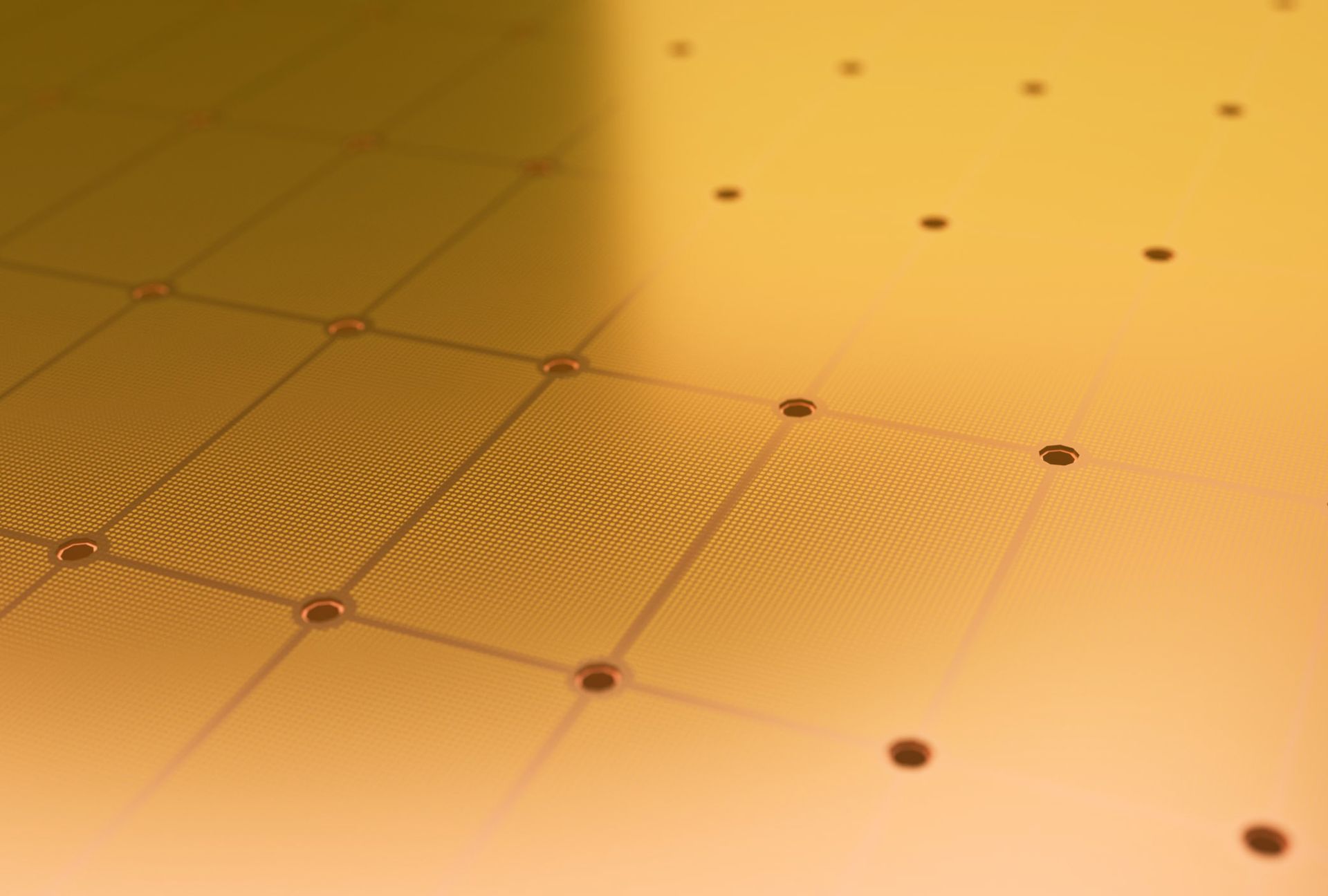

Cerebras aims to establish itself as a leader in high-speed AI inference by leveraging its Wafer-Scale Engine (WSE-3) processor, which is said to outperform GPU solutions by a factor of 10 to 70. This performance boost is particularly pertinent as AI models incorporate more complex reasoning capabilities, which generally result in slower processing times.

Wang indicated that although Nvidia acknowledges the significance of reasoning models, these typically require longer computation times. Cerebras has already partnered with companies such as Perplexity AI and Mistral AI, which use its technology to speed up their AI search engine and assistant, respectively.

The Cerebras hardware reportedly achieves inference speeds up to 13 times faster than conventional GPU-based solutions across multiple AI models, including Llama 3.3 70B and DeepSeek-R1 70B.

The economic advantages of Cerebras’ offering are also significant. Wang stated that Meta’s Llama 3.3 70B model, optimized for Cerebras systems, delivers performance comparable to OpenAI’s GPT-4 at a significantly lower operational cost. He put the operational cost for GPT-4 at approximately $4.40 and for Llama 3.3 at around 60 cents, a roughly sevenfold reduction that approaches an order of magnitude when adopting Cerebras technology.
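
To make the cost comparison concrete, here is a minimal sketch of the arithmetic behind Wang's two figures. The function and variable names are illustrative. The article does not state the unit the prices refer to, so the sketch assumes only that both figures share the same unit, in which case the ratio does not depend on it.

```python
def cost_ratio(baseline: float, alternative: float) -> float:
    """How many times more expensive the baseline is than the alternative."""
    return baseline / alternative


def percent_saving(baseline: float, alternative: float) -> float:
    """Cost reduction relative to the baseline, as a percentage."""
    return (baseline - alternative) / baseline * 100


# Figures quoted in the article; the unit is not stated there,
# so we assume both prices are expressed in the same unit.
gpt4_cost = 4.40
llama_cost = 0.60

ratio = cost_ratio(gpt4_cost, llama_cost)
saving = percent_saving(gpt4_cost, llama_cost)

print(f"{ratio:.1f}x cheaper, {saving:.0f}% lower cost")
```

On these numbers the gap is about 7.3 times, or roughly an 86% saving, which is why it is described as approaching, rather than reaching, an order of magnitude.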

Cerebras also plans to invest in disaster-resistant infrastructure for its expansion, with a new facility in Oklahoma City designed to withstand extreme weather conditions. Scheduled to open in June 2025, this facility will feature over 300 Cerebras CS-3 systems, triple-redundant power stations, and specialized water-cooling systems to support its hardware.

This expansion reflects Cerebras’ strategy to maintain a competitive edge in an AI hardware market largely dominated by Nvidia. Wang asserts that the skepticism surrounding the company’s customer uptake has been dispelled by the diversity of its client base, as it aims to enhance performance in sectors including voice and video processing, reasoning models, and coding applications.

With 85% of its capacity located in the U.S., Cerebras is positioning itself as a significant contributor to domestic AI infrastructure, a priority as discussions around technological sovereignty grow. Dhiraj Mallick, COO of Cerebras Systems, stated that the new data centers will play a vital role in the evolution of AI innovation.

Featured image credit: Cerebras/Unsplash