The study examines the concept of agency, defined as a system’s ability to direct outcomes toward a goal, and argues that determining whether a system exhibits agency depends inherently on the reference frame used to assess it. By analyzing the essential properties of agency, the study contends that any evaluation of agency must consider the perspective from which it is made. Agency is therefore not an absolute attribute but one that varies across frames of reference. This view has significant implications for fields such as reinforcement learning, where understanding and defining agency is crucial.

Researchers from Google DeepMind and the University of Alberta examine agency, the capacity of a system to direct outcomes toward a goal, through the lens of reinforcement learning. They argue that assessing agency is inherently frame-dependent: agency can only be evaluated relative to a specific reference frame. By analyzing the essential properties of agency proposed in prior work, they show that each of these properties is frame-dependent. They conclude that any foundational study of agency must account for this frame dependence, and they discuss the implications for reinforcement learning.

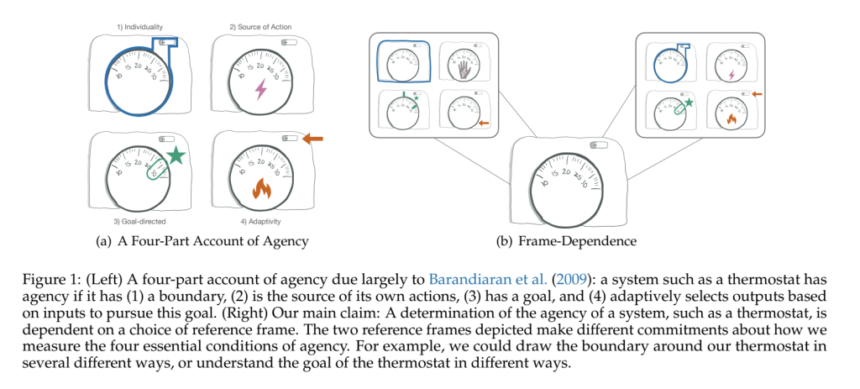

The study posits that determining whether a system exhibits agency inherently relies on the chosen reference frame. The authors argue that each of the four essential properties of agency (individuality, source of action, normativity, and adaptivity) is contingent on arbitrary commitments that define this reference frame. For instance, establishing a system’s boundary (individuality) or identifying which goal-directed behavior is meaningful (normativity) requires subjective decisions. The authors conclude that agency cannot be measured universally without considering these contextual frames.

This perspective has significant implications for reinforcement learning (RL), where agents are designed to make decisions that achieve specific goals. If agency is frame-dependent, then the evaluation of an RL agent’s behavior and effectiveness may vary with the chosen reference frame. Consequently, developing a foundational science of agency requires acknowledging frame dependence and incorporating it into the analysis and design of RL systems.

Agency, the capacity of a system to direct outcomes toward a goal, is a central topic in fields like biology, philosophy, cognitive science, and artificial intelligence. Determining whether a system exhibits agency is challenging, as it often depends on the perspective from which it’s evaluated. This perspective, or reference frame, influences how we interpret a system’s actions and goals. For instance, a thermostat can be seen as goal-directed, aiming to maintain a set temperature, but this interpretation depends on how we define goal-directedness. Similarly, a rock rolling down a hill might be viewed as having the goal of reaching the bottom, but this is also a perspective-dependent interpretation.
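The thermostat example can be made concrete with a toy sketch. It is not the authors' formalism: the thermostat model, its `gain` parameter, and the `looks_goal_directed` criterion below are all hypothetical, chosen only to show that the same recorded behavior yields different verdicts depending on which goal the observer commits to.

```python
from typing import List


def simulate_thermostat(start: float, set_point: float, steps: int,
                        gain: float = 0.5) -> List[float]:
    """Hypothetical toy thermostat: each step moves the temperature
    a fraction `gain` of the way toward `set_point`."""
    temps = [start]
    for _ in range(steps):
        t = temps[-1]
        temps.append(t + gain * (set_point - t))
    return temps


def looks_goal_directed(trajectory: List[float], hypothesized_goal: float) -> bool:
    """Hypothetical criterion chosen by the observer: the trajectory counts as
    directed toward `hypothesized_goal` if its distance to that goal never
    increases and ends smaller than it started."""
    dists = [abs(t - hypothesized_goal) for t in trajectory]
    non_increasing = all(b <= a for a, b in zip(dists, dists[1:]))
    return non_increasing and dists[-1] < dists[0]


# One fixed trajectory: the thermostat warms a room from 15 toward 20 degrees.
traj = simulate_thermostat(start=15.0, set_point=20.0, steps=10)

# The verdict depends on the goal the observer attributes to the system.
print(looks_goal_directed(traj, hypothesized_goal=20.0))  # True
print(looks_goal_directed(traj, hypothesized_goal=10.0))  # False
print(looks_goal_directed(traj, hypothesized_goal=30.0))  # True
```

Note that the trajectory also passes the test for a goal of 30 degrees, which the thermostat never reaches. Under this assumed criterion, behavior alone does not single out "the" goal; an observer has to commit to one, much as with the rock rolling down the hill.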

Agency is closely linked to intelligence, but the relationship between the two is not yet well understood. Exploring this relationship through the lens of frame dependence opens a new frontier for understanding central concepts in reinforcement learning. A natural next step is to develop a precise definition of agent reference frames and a formal proof of the claim that agency is frame-dependent. Choosing an appropriate reference frame is crucial, yet it remains unclear which frame-selection principles are defensible and what these principles imply for the study of agents. Investigating, formalizing, and comparing such principles is an important direction for further research.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Frame-Dependent Agency: Implications for Reinforcement Learning and Intelligence appeared first on MarkTechPost.
