Advancements in multimodal large language models have enhanced AI's ability to interpret and reason about complex visual and textual information. Despite these improvements, the field still faces persistent challenges, especially in mathematical reasoning. Multimodal AI systems, even those trained on extensive data with large parameter counts, frequently struggle to interpret and solve mathematical problems that involve visual contexts or geometric configurations. These limitations highlight the need for specialized models that can analyze complex multimodal mathematical problems with greater accuracy, efficiency, and reasoning depth.

Researchers at Nanyang Technological University (NTU) introduced the MMR1-Math-v0-7B model and the accompanying MMR1-Math-RL-Data-v0 dataset to address these challenges. The model is tailored specifically for mathematical reasoning in multimodal tasks and reports state-of-the-art performance with notable training efficiency. What sets MMR1-Math-v0-7B apart from previous multimodal models is that it reaches leading performance with a remarkably small training dataset.

The model was fine-tuned on roughly 6,000 carefully curated samples drawn from publicly available datasets. The researchers applied a balanced data selection strategy, aiming for an even spread of problem difficulty and a diverse mix of mathematical reasoning types. By systematically filtering out overly simple problems, they ensured that the training set consisted of problems that genuinely challenge the model and strengthen its reasoning.
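
The filter-then-balance idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not NTU's actual pipeline: the `Problem` record, the use of a base-model pass rate as the "too simple" signal, the 0.8 threshold, and the `select_balanced` helper are all hypothetical.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Problem:
    # Hypothetical record layout; the released dataset's schema may differ.
    problem_id: str
    category: str          # assumed topic label, e.g. "geometry" or "chart"
    difficulty: int        # assumed scale: 1 (easy) to 5 (hard)
    base_pass_rate: float  # assumed fraction of base-model samples answered correctly


def select_balanced(problems, per_bucket, max_pass_rate=0.8, seed=0):
    """Drop problems the base model already solves too reliably, then take
    at most `per_bucket` problems from each (category, difficulty) bucket."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for p in problems:
        if p.base_pass_rate > max_pass_rate:
            continue  # too easy to provide a useful training signal
        buckets[(p.category, p.difficulty)].append(p)
    selected = []
    for key in sorted(buckets):
        items = buckets[key]
        rng.shuffle(items)  # random pick within a bucket, reproducible via seed
        selected.extend(items[:per_bucket])
    return selected


pool = [
    Problem("a", "geometry", 3, 0.25),
    Problem("b", "geometry", 3, 0.95),  # filtered out as too easy
    Problem("c", "chart", 4, 0.50),
    Problem("d", "chart", 4, 0.10),
]
chosen = select_balanced(pool, per_bucket=1)
print(sorted(p.category for p in chosen))  # ['chart', 'geometry']
```

Capping each bucket is what keeps easy-to-collect categories from dominating the final set; the real selection criteria and bucket definitions are not described in the article.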

The architecture of MMR1-Math-v0-7B is built on the Qwen2.5-VL multimodal backbone and further refined with Group Relative Policy Optimization (GRPO), a reinforcement learning method. Using GRPO, the researchers trained the model for 15 epochs in approximately six hours on 64 NVIDIA H100 GPUs. The short training period and efficient use of compute underscore how quickly the model absorbs the training signal and generalizes from it.
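
The core of GRPO is its group-relative advantage: for each prompt, several answers are sampled and each answer's reward is normalized against the others in its group, so no learned value network is needed. The sketch below shows that step plus a PPO-style clipped surrogate, as a simplified illustration rather than the MMR1 training code. The binary correctness reward, the population standard deviation, `clip_eps=0.2`, and the helper names are assumptions, and the KL penalty toward a reference model that full GRPO includes is omitted.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each sampled answer's reward against its group
    (all answers sampled for the same prompt)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations differ here
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO-style clipped surrogate for one sampled answer, where
    ratio = pi_new(answer) / pi_old(answer). Training maximizes this."""
    clipped = max(min(ratio, 1 + clip_eps), 1 - clip_eps)
    return min(ratio * advantage, clipped * advantage)


# Four sampled answers to one prompt, scored 1 if correct and 0 otherwise (assumed reward).
adv = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in adv])  # [1.0, -1.0, -1.0, 1.0]
```

If every sampled answer receives the same reward, as happens with a problem the model always solves, all advantages are zero and the prompt contributes nothing to the policy-gradient term. That is plausibly one reason filtering out overly simple problems pays off under GRPO.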

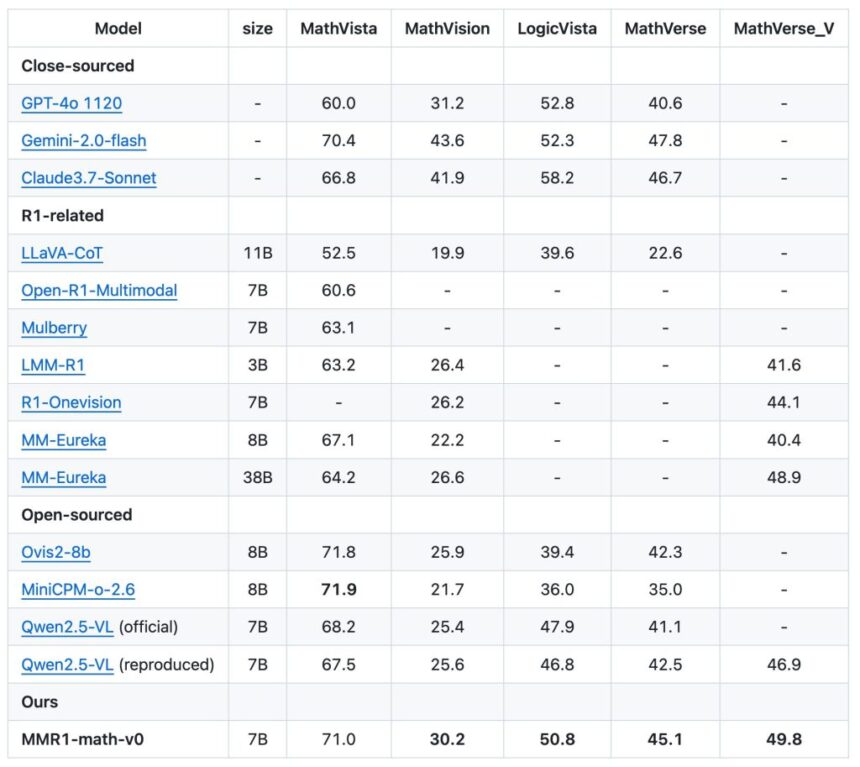

MMR1-Math-v0-7B was evaluated with the standardized VLMEvalKit on established benchmarks for multimodal mathematical reasoning: MathVista_MINI, MathVision, LogicVista, and MathVerse_MINI. It surpassed existing open-source 7B models and was competitive with proprietary models that have significantly larger parameter counts.

In particular, the model achieved 71.0% accuracy on MathVista, outperforming notable counterparts such as Qwen2.5-VL (68.2%) and LMM-R1 (63.2%). On MathVision, it scored 30.2%, ahead of other prominent models in the same parameter class. On LogicVista and MathVerse, it reached 50.8% and 45.1%, respectively, higher than nearly all comparable models. These results point to strong generalization and multimodal reasoning in mathematical contexts.
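
To make the MathVista comparison concrete, the gaps can be computed directly from the accuracies quoted above. This small sketch uses only the article's reported numbers; the `margins` helper is hypothetical, and the gaps are absolute percentage points rather than relative improvements.

```python
def margins(scores, model):
    """Absolute percentage-point gap between `model` and every other listed model."""
    ours = scores[model]
    return {name: round(ours - s, 1) for name, s in scores.items() if name != model}


# MathVista accuracies (%) as reported in the article.
mathvista = {"MMR1-Math-v0-7B": 71.0, "Qwen2.5-VL": 68.2, "LMM-R1": 63.2}
print(margins(mathvista, "MMR1-Math-v0-7B"))  # {'Qwen2.5-VL': 2.8, 'LMM-R1': 7.8}
```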

Key takeaways from this release:

- The MMR1-Math-v0-7B model, developed by NTU researchers, sets a new state of the art for multimodal mathematical reasoning among open-source 7B-parameter models.

- It achieves this performance with an exceptionally small training set of roughly 6,000 carefully curated multimodal samples.

- Training with an efficient reinforcement learning method (GRPO) takes about 6 hours on 64 NVIDIA H100 GPUs and yields robust performance.

- The complementary MMR1-Math-RL-Data-v0 dataset, comprising 5,780 multimodal math problems, ensures diverse, balanced, and challenging content for model training.

- It outperforms other prominent multimodal models on standard benchmarks, demonstrating strong efficiency, generalization, and reasoning in complex mathematical scenarios.
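
The reported training setup also supports a quick back-of-the-envelope compute estimate. The sketch below assumes that the roughly six hours is the wall-clock time for the full 15-epoch run and that each epoch covers all 5,780 problems once; the article's figures are approximate, the `training_budget` helper is hypothetical, and the prompt count ignores the multiple answers GRPO samples per prompt.

```python
def training_budget(gpus, hours, epochs, num_problems):
    """Rough compute figures derived from the reported training setup."""
    return {
        "gpu_hours": gpus * hours,
        "minutes_per_epoch": hours * 60 / epochs,
        "prompt_visits": num_problems * epochs,  # excludes multiple rollouts per prompt
    }


budget = training_budget(gpus=64, hours=6, epochs=15, num_problems=5_780)
print(budget)  # {'gpu_hours': 384, 'minutes_per_epoch': 24.0, 'prompt_visits': 86700}
```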

Check out the Hugging Face Page and GitHub Page. All credit for this research goes to the researchers of this project.

The post MMR1-Math-v0-7B Model and MMR1-Math-RL-Data-v0 Dataset Released: New State of the Art Benchmark in Efficient Multimodal Mathematical Reasoning with Minimal Data appeared first on MarkTechPost.
