In this tutorial, we’ll build an interactive multimodal image-captioning application using Google Colab, Salesforce’s BLIP model, and Streamlit for the web interface. Multimodal models, which combine image and text processing, have become increasingly important in AI applications, enabling tasks such as image captioning and visual question answering. This step-by-step guide walks through the setup and shows how to integrate and deploy the model, even without extensive experience.

    !pip install transformers torch torchvision streamlit Pillow pyngrok

First, we install the dependencies needed to build the multimodal image-captioning app: Transformers (for the BLIP model), Torch and Torchvision (for deep learning and image processing), Streamlit (for creating the UI), Pillow (for handling image files), and pyngrok (for exposing the app online via ngrok).
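
To confirm that the install step worked before moving on, a quick check like the following can help. This is a minimal sketch and not part of the original notebook: `REQUIRED` and `installed_versions` are hypothetical names, and it relies only on the standard library's `importlib.metadata`.

```python
from importlib import metadata

# Distribution names as passed to pip in the install step above.
REQUIRED = ["transformers", "torch", "torchvision", "streamlit", "Pillow", "pyngrok"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as `NOT INSTALLED` suggests the `pip install` cell should be rerun before writing `app.py`.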

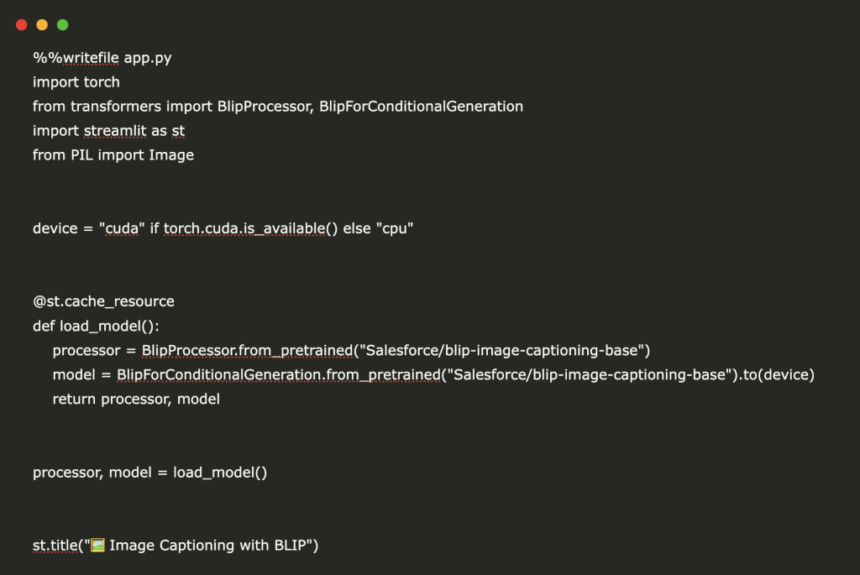

    %%writefile app.py
    import torch
    from transformers import BlipProcessor, BlipForConditionalGeneration
    import streamlit as st
    from PIL import Image

    # Use the GPU when Colab provides one; otherwise fall back to the CPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"

    @st.cache_resource
    def load_model():
        # Cached so the weights are loaded once, not on every Streamlit rerun.
        processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-base")
        model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-base").to(device)
        return processor, model

    processor, model = load_model()

    st.title("Image Captioning with BLIP")

    uploaded_file = st.file_uploader("Upload your image:", type=["jpg", "jpeg", "png"])

    if uploaded_file is not None:
        image = Image.open(uploaded_file).convert('RGB')
        st.image(image, caption="Uploaded Image", use_column_width=True)

        if st.button("Generate Caption"):
            # Preprocess the image, generate token IDs, and decode them to text.
            inputs = processor(image, return_tensors="pt").to(device)
            outputs = model.generate(**inputs)
            caption = processor.decode(outputs[0], skip_special_tokens=True)
            st.markdown(f"### **Caption:** {caption}")

Next, we create the Streamlit-based image-captioning app around the BLIP model. The script loads `BlipProcessor` and `BlipForConditionalGeneration` from Hugging Face so the model can preprocess images and generate captions. The Streamlit UI lets users upload an image, displays it, and generates a caption when the button is clicked. The `@st.cache_resource` decorator ensures the model is loaded only once rather than on every rerun, and CUDA is used when available for faster inference.
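
The captioning step can also be pulled out of the UI into a plain function, which makes it easier to try outside Streamlit. The sketch below is not part of the original app: `caption_image` and its `prompt` and `max_new_tokens` parameters are hypothetical names chosen for illustration, and it assumes `processor` and `model` are the BLIP objects returned by `load_model()`. Passing a text prefix to the processor for conditional captioning follows the usage shown on the BLIP model card.

```python
def caption_image(image, processor, model, device="cpu", prompt=None, max_new_tokens=30):
    """Return a caption for an image using a BLIP processor/model pair.

    prompt: optional text prefix for conditional captioning
            (for example "a photography of").
    max_new_tokens: cap on generated tokens; 30 is an arbitrary
            illustrative value, not a setting from the original app.
    """
    if prompt:
        inputs = processor(images=image, text=prompt, return_tensors="pt")
    else:
        inputs = processor(images=image, return_tensors="pt")

    # Move the preprocessed tensors to the same device as the model.
    inputs = inputs.to(device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.decode(output_ids[0], skip_special_tokens=True).strip()
```

Inside `app.py`, the button handler could then be reduced to something like `caption = caption_image(image, processor, model, device)`.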

    from pyngrok import ngrok

    NGROK_TOKEN = "use your own NGROK token here"
    ngrok.set_auth_token(NGROK_TOKEN)

    public_url = ngrok.connect(8501)
    print("Your Streamlit app is available at:", public_url)

    # Run the Streamlit app in the background
    !streamlit run app.py &>/dev/null &

Finally, we make the Streamlit app running in Google Colab publicly accessible using ngrok. The code does the following:

- Authenticates ngrok using your personal token (`NGROK_TOKEN`) to create a secure tunnel.

- Exposes the Streamlit app running on port `8501` to an external URL via `ngrok.connect(8501)`.

- Prints the public URL, which can be used to access the app in any browser.

- Launches the Streamlit app (`app.py`) in the background.

This method lets you interact remotely with your image captioning app, even though Google Colab does not provide direct web hosting.
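
Because Streamlit is launched in the background, the public URL can be printed before the server is actually listening on port `8501`, and opening the link too early may show a connection error. The helper below is a hedged sketch of one way to wait for the port. `wait_for_port` is a hypothetical name, not something provided by pyngrok or Streamlit, and it uses only the standard library.

```python
import socket
import time


def wait_for_port(port, host="127.0.0.1", timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Nothing listening yet (or connection refused); retry shortly.
            time.sleep(interval)
    return False


# Example use in Colab, after the `!streamlit run app.py` line:
# if wait_for_port(8501):
#     print("Streamlit is up; the ngrok URL should now respond.")
```

The 30-second default timeout is an assumption; the first launch may take longer if the BLIP weights still need to be downloaded.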

In conclusion, we’ve successfully created and deployed a multimodal image captioning app powered by Salesforce’s BLIP and Streamlit, hosted securely via ngrok from a Google Colab environment. This hands-on exercise demonstrated how easily sophisticated machine learning models can be integrated into user-friendly interfaces and provided a foundation for further exploring and customizing multimodal applications.

Here is the Colab Notebook.

The post A Coding Guide to Build a Multimodal Image Captioning App Using Salesforce BLIP Model, Streamlit, Ngrok, and Hugging Face appeared first on MarkTechPost.