Understanding long-form videos, which run from minutes to hours, is a major challenge in computer vision, especially as video understanding tasks expand beyond short clips. A key difficulty is efficiently identifying, among the thousands of frames in a lengthy video, the few that are needed to answer a given query. Most vision-language models (VLMs), such as LLaVA and Tarsier, process hundreds of tokens per image, making frame-by-frame analysis of long videos computationally expensive. To address this, a new paradigm known as temporal search has gained prominence. Unlike traditional temporal localization, which typically identifies continuous segments within a video, temporal search aims to retrieve a sparse set of highly relevant frames dispersed across the entire timeline, akin to finding a “needle in a haystack.”
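To make the cost argument concrete, here is a back-of-the-envelope sketch of the visual token count when every sampled frame is encoded. The function name, the 1 fps sampling rate, and the 196 tokens per frame are illustrative assumptions, not figures from the paper.

```python
def visual_token_count(duration_s: float, fps: float, tokens_per_frame: int) -> int:
    """Total visual tokens if every sampled frame is passed to the model."""
    num_frames = int(duration_s * fps)
    return num_frames * tokens_per_frame


# Assumed numbers: a 1-hour video sampled at 1 fps, 196 tokens per frame.
dense_tokens = visual_token_count(3600, 1.0, 196)   # 705600 tokens
sparse_tokens = 8 * 196                             # 1568 tokens for 8 keyframes
print(dense_tokens, sparse_tokens)
```

Under these assumed numbers, encoding every frame costs hundreds of times more tokens than encoding a handful of well-chosen keyframes, which is the gap temporal search tries to close.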

While advancements in attention mechanisms and video transformers have improved temporal modeling, these methods still face limitations in capturing long-range dependencies. Some approaches attempt to overcome this by compressing video data or selecting specific frames to reduce the input size. Although benchmarks for long-video understanding exist, they mostly evaluate performance based on downstream question-answering tasks rather than directly assessing the effectiveness of temporal search. In contrast, the emerging focus on keyframe selection and fine-grained frame retrieval—ranging from glance-based to caption-guided methods—offers a more targeted and efficient approach to understanding long-form video content.

Researchers from Stanford, Northwestern, and Carnegie Mellon revisited temporal search for long-form video understanding and introduced LV-HAYSTACK, a large benchmark with 480 hours of real-world videos and over 15,000 annotated QA instances. They frame the task as finding a few key frames among thousands, which exposes the limitations of current models. To address this, they propose T*, a framework that reimagines temporal search as spatial search, using adaptive zoom-in techniques across time and space. T* significantly boosts performance while reducing computational cost, improving the accuracy of models like GPT-4o and LLaVA-OV while using far fewer frames.

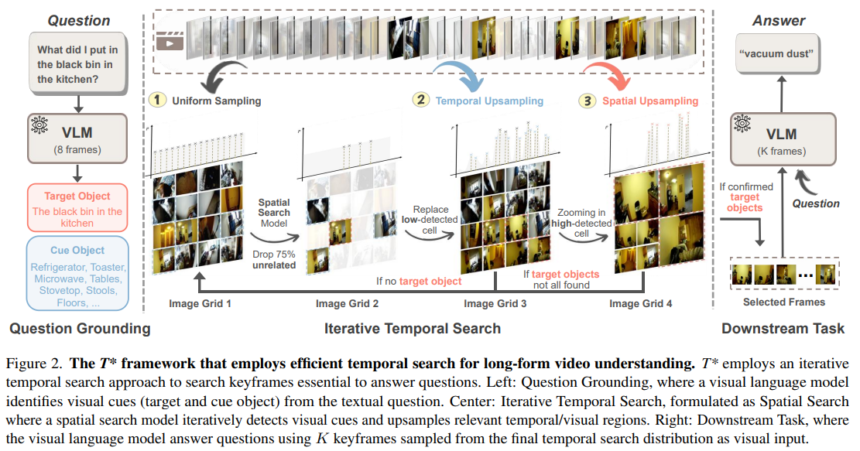

The study introduces a Temporal Search (TS) task to enhance video understanding in long-context vision-language models. The goal is to select a minimal set of keyframes from a video that retains all the information necessary to answer a given question. The proposed T* framework does this in three stages: question grounding, iterative temporal search, and task completion. It identifies the objects relevant to the question, locates them across frames using a spatial search model, and updates its frame sampling strategy based on the resulting confidence scores. Evaluated on the LV-HAYSTACK benchmark, T* shows improved efficiency and accuracy at significantly lower computational cost.
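The iterative search stage described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `temporal_search`, its parameters, and the neighbour-weighting rule are all hypothetical, and `score_frame` is a stand-in for whatever spatial search model returns a confidence that the target objects appear in a frame. Question grounding and task completion are left out.

```python
import random
from typing import Callable, Dict, List


def temporal_search(
    num_frames: int,
    score_frame: Callable[[int], float],  # hypothetical detector: confidence in [0, 1]
    top_k: int = 4,
    batch_size: int = 8,
    max_iters: int = 10,
    spread: int = 5,
    seed: int = 0,
) -> List[int]:
    """Return up to top_k frame indices with the highest confidence, in time order."""
    rng = random.Random(seed)
    weights = [1.0] * num_frames   # start from a uniform sampling prior
    scores: Dict[int, float] = {}  # confidence of every frame inspected so far

    for _ in range(max_iters):
        unseen = [i for i in range(num_frames) if i not in scores]
        if not unseen:
            break
        # Draw a batch of unseen frames in proportion to the current weights.
        drawn = rng.choices(unseen, weights=[weights[i] for i in unseen], k=batch_size)
        for idx in sorted(set(drawn)):
            conf = score_frame(idx)
            scores[idx] = conf
            # "Zoom in": raise the sampling weight of frames near confident hits,
            # with the boost decaying as the temporal distance grows.
            lo, hi = max(0, idx - spread), min(num_frames, idx + spread + 1)
            for j in range(lo, hi):
                weights[j] += conf * num_frames / (1 + abs(j - idx))

    best = sorted(scores, key=scores.get, reverse=True)[:top_k]
    return sorted(best)


# Toy usage: pretend the queried object is only visible around frame 500.
keyframes = temporal_search(1000, lambda i: max(0.0, 1.0 - abs(i - 500) / 50))
print(keyframes)
```

The design choice worth noting is that the detector is called on at most `batch_size * max_iters` frames, so the cost is bounded by the frame budget rather than by the length of the video.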

The study evaluates the proposed T* temporal search framework across multiple datasets and tasks, including LV-HAYSTACK, LongVideoBench, VideoMME, NExT-QA, EgoSchema, and Ego4D LongVideo QA. T* is integrated into both open-source and proprietary vision-language models and consistently improves their performance, especially on long videos and under limited frame budgets. It uses attention, object detection, or trained models for efficient keyframe selection, achieving high accuracy at reduced computational cost. Experiments show that T* progressively aligns its sampling with the relevant frames over iterations, approaches human-level performance as more frames are allowed, and significantly outperforms uniform and retrieval-based sampling methods across the evaluation benchmarks.
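A small calculation helps explain why query-agnostic sampling struggles under tight frame budgets. The sketch below computes the chance that a random sample of frames contains at least one relevant frame. It assumes frames are drawn uniformly at random without replacement, which only approximates fixed-stride uniform sampling, and the function name and example numbers are illustrative rather than taken from the paper.

```python
from math import comb


def hit_probability(total_frames: int, relevant_frames: int, budget: int) -> float:
    """P(at least one relevant frame) when `budget` frames are drawn at random."""
    if budget > total_frames - relevant_frames:
        return 1.0  # too few irrelevant frames to fill the whole sample
    misses = comb(total_frames - relevant_frames, budget)
    return 1.0 - misses / comb(total_frames, budget)


# Assumed scenario: 5 relevant frames hidden among 10,000.
for budget in (8, 32, 128):
    print(budget, round(hit_probability(10_000, 5, budget), 4))
```

In this assumed scenario the hit probability stays low unless the budget grows large, which is why a search that adapts its sampling to the question can help when only a few frames are allowed.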

In conclusion, the work tackles the challenge of understanding long-form videos by revisiting the temporal search methods used in state-of-the-art VLMs. The authors frame the task as the “Long Video Haystack” problem: identifying a few relevant frames from tens of thousands. To support this, they introduce LV-HAYSTACK, a benchmark with 480 hours of video and over 15,000 human-annotated instances, and their findings show that existing methods perform poorly on it. To address this, they propose T*, a lightweight framework that transforms temporal search into a spatial problem using adaptive zooming techniques. T* significantly boosts the performance of leading VLMs under tight frame budgets, demonstrating its effectiveness.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project.

The post T* and LV-Haystack: A Spatially-Guided Temporal Search Framework for Efficient Long-Form Video Understanding appeared first on MarkTechPost.
