Keyword-based search matches literal terms, so it often misses documents that express the same idea in different words. This tutorial demonstrates how to build a semantic document search engine using:

- Sentence Transformers models from Hugging Face to convert text into vector embeddings

- Chroma DB as our vector database for efficient similarity search

- LangChain's text splitter to break long documents into searchable chunks

This implementation enables semantic search capabilities – finding documents based on meaning rather than just keyword matching. By the end of this tutorial, you’ll have a working document search engine that can:

- Process and embed text documents

- Store these embeddings efficiently

- Retrieve the most semantically similar documents to any query

- Filter search results by metadata, such as the source article
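To see why matching on keywords alone can fall short, consider a minimal sketch that scores documents by word overlap with the query. The `keyword_overlap` helper and the sample texts are hypothetical and exist only for this illustration; they are not part of the tutorial's pipeline.

```python
import re


def keyword_overlap(query: str, document: str) -> float:
    """Jaccard overlap between the word sets of the query and the document."""
    query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
    doc_words = set(re.findall(r"[a-z0-9]+", document.lower()))
    if not query_words or not doc_words:
        return 0.0
    return len(query_words & doc_words) / len(query_words | doc_words)


query = "car repair"
docs = [
    "Automobile maintenance and servicing guide",  # same topic, no shared words
    "Repair manual for vintage radios",            # shares a word, different topic
]

scores = [keyword_overlap(query, d) for d in docs]
for doc, score in zip(docs, scores):
    print(f"{score:.2f}  {doc}")
```

The on-topic document scores zero because it shares no words with the query, while the off-topic one scores higher. Embedding-based search is meant to close this gap by comparing meaning rather than surface terms.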

Follow the steps below in order to implement DocSearchAgent.

First, we need to install the necessary libraries.

    !pip install chromadb sentence-transformers langchain datasets

Let’s start by importing the libraries we’ll use:

    import os
    import time

    import numpy as np
    import pandas as pd
    from datasets import load_dataset
    import chromadb
    from chromadb.utils import embedding_functions
    from sentence_transformers import SentenceTransformer
    from langchain.text_splitter import RecursiveCharacterTextSplitter

For this tutorial, we’ll use a subset of Wikipedia articles from the Hugging Face datasets library. This gives us a diverse set of documents to work with.

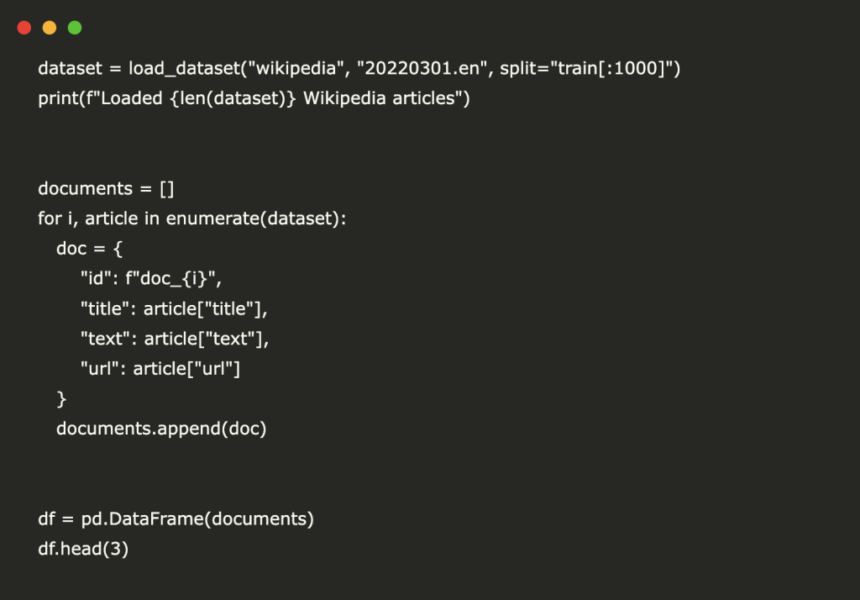

    # Load the first 1,000 articles of the English Wikipedia dump
    dataset = load_dataset("wikipedia", "20220301.en", split="train[:1000]")
    print(f"Loaded {len(dataset)} Wikipedia articles")

    # Keep only the fields we need and give each article a stable ID
    documents = []
    for i, article in enumerate(dataset):
        doc = {
            "id": f"doc_{i}",
            "title": article["title"],
            "text": article["text"],
            "url": article["url"]
        }
        documents.append(doc)

    # Preview the first few documents
    df = pd.DataFrame(documents)
    df.head(3)

Now, let’s split our documents into smaller chunks for more granular searching:
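Two parameters drive the chunking step: `chunk_size` caps the length of each chunk, and `chunk_overlap` is the number of trailing characters of one chunk that are repeated at the start of the next, so text near a boundary keeps some of its surrounding context. The following is a deliberately simplified sketch using a hypothetical helper, `chunk_with_overlap`, that slices at fixed character offsets. It is not how LangChain implements its splitter.

```python
from typing import List


def chunk_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must satisfy 0 <= chunk_overlap < chunk_size")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
pieces = chunk_with_overlap(sample, chunk_size=10, chunk_overlap=4)
for piece in pieces:
    print(piece)
```

The `RecursiveCharacterTextSplitter` in the tutorial code that follows takes the same two parameters but, by default, tries to break on paragraph, line, and word boundaries before falling back to raw character offsets, so its chunks will not line up exactly with this sketch.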

    # chunk_size and chunk_overlap are measured in characters (length_function=len)
    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=200,
        length_function=len,
    )

    chunks = []
    chunk_ids = []
    chunk_sources = []

    for i, doc in enumerate(documents):
        doc_chunks = text_splitter.split_text(doc["text"])
        chunks.extend(doc_chunks)
        # IDs encode the document index and the chunk's position within it
        chunk_ids.extend([f"chunk_{i}_{j}" for j in range(len(doc_chunks))])
        # Record the article title so each chunk can be traced to its source
        chunk_sources.extend([doc["title"]] * len(doc_chunks))

    print(f"Created {len(chunks)} chunks from {len(documents)} documents")

We’ll use a pre-trained sentence transformer model from Hugging Face to create our embeddings:

    model_name = "sentence-transformers/all-MiniLM-L6-v2"
    embedding_model = SentenceTransformer(model_name)

    # Embed a sample sentence to confirm the model loads and check the vector size
    sample_text = "This is a sample text to test our embedding model."
    sample_embedding = embedding_model.encode(sample_text)
    print(f"Embedding dimension: {len(sample_embedding)}")

Now, let’s set up Chroma DB, a lightweight vector database well suited to our search engine:

    # In-memory client: the collection is lost when the session ends
    chroma_client = chromadb.Client()

    embedding_function = embedding_functions.SentenceTransformerEmbeddingFunction(model_name=model_name)

    # Use cosine distance so that 1 - distance can be read as a cosine similarity
    # (Chroma's default metric is squared L2)
    collection = chroma_client.create_collection(
        name="document_search",
        embedding_function=embedding_function,
        metadata={"hnsw:space": "cosine"}
    )

    # Add chunks in batches; Chroma embeds each batch with embedding_function
    batch_size = 100

    for i in range(0, len(chunks), batch_size):
        end_idx = min(i + batch_size, len(chunks))

        batch_ids = chunk_ids[i:end_idx]
        batch_chunks = chunks[i:end_idx]
        batch_sources = chunk_sources[i:end_idx]

        collection.add(
            ids=batch_ids,
            documents=batch_chunks,
            metadatas=[{"source": source} for source in batch_sources]
        )

        print(f"Added batch {i//batch_size + 1}/{(len(chunks)-1)//batch_size + 1} to the collection")

    print(f"Total documents in collection: {collection.count()}")

Now comes the exciting part – searching through our documents:
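Conceptually, a vector query embeds the query text and returns the stored chunks whose vectors lie closest to it. The sketch below shows that idea as a brute-force scan over a tiny hand-written index. The three-dimensional vectors and the `top_k` helper are made up for illustration, and Chroma itself relies on an approximate nearest-neighbor index rather than a linear scan.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k most similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings": real ones from all-MiniLM-L6-v2 have far more dimensions
toy_index = {
    "climate": [0.9, 0.1, 0.0],
    "ai": [0.1, 0.9, 0.1],
    "space": [0.0, 0.2, 0.9],
}

hits = top_k([0.8, 0.2, 0.1], toy_index, k=2)
for doc_id, score in hits:
    print(f"{doc_id}: {score:.3f}")
```

With the real collection, `collection.query` handles both the embedding and the lookup, as in the tutorial's search function below.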

    def search_documents(query, n_results=5):
        """
        Search for documents similar to the query.

        Args:
            query (str): The search query
            n_results (int): Number of results to return

        Returns:
            dict: Search results
        """
        start_time = time.time()

        # Chroma embeds the query text and returns the nearest stored chunks
        results = collection.query(
            query_texts=[query],
            n_results=n_results
        )

        end_time = time.time()
        search_time = end_time - start_time

        print(f"Search completed in {search_time:.4f} seconds")
        return results

    queries = [
        "What are the effects of climate change?",
        "History of artificial intelligence",
        "Space exploration missions"
    ]

    for query in queries:
        print(f"\nQuery: {query}")
        results = search_documents(query)

        # Results are nested per query; index 0 holds the hits for our single query
        for i, (doc, metadata) in enumerate(zip(results['documents'][0], results['metadatas'][0])):
            print(f"\nResult {i+1} from {metadata['source']}:")
            print(f"{doc[:200]}...")

Let’s create a simple interactive function to provide a better user experience:

    def interactive_search():
        """
        Interactive search interface for the document search engine.
        """
        while True:
            query = input("\nEnter your search query (or 'quit' to exit): ")

            if query.strip().lower() == 'quit':
                print("Exiting search interface...")
                break

            # Use at least 1 result; fall back to 5 if the input is not an integer
            try:
                n_results = max(1, int(input("How many results would you like? ")))
            except ValueError:
                n_results = 5

            results = search_documents(query, n_results)

            print(f"\nFound {len(results['documents'][0])} results for '{query}':")

            for i, (doc, metadata, distance) in enumerate(zip(
                results['documents'][0],
                results['metadatas'][0],
                results['distances'][0]
            )):
                # 1 - distance is a cosine similarity only when the collection
                # uses cosine distance; Chroma's default metric is squared L2
                relevance = 1 - distance
                print(f"\n--- Result {i+1} ---")
                print(f"Source: {metadata['source']}")
                print(f"Relevance: {relevance:.2f}")
                print(f"Excerpt: {doc[:300]}...")
                print("-" * 50)

    interactive_search()
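How a returned distance maps to a relevance score depends on the collection's distance metric. For unit-length vectors, squared L2 distance d and cosine similarity s are related by d = 2 - 2s, so s = 1 - d/2, whereas cosine distance is simply 1 - s. The sketch below checks that relationship numerically. The `relevance_from_distance` helper is hypothetical, and it assumes the embeddings are normalized to unit length, which is worth verifying for whichever model you use.

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def squared_l2(a: List[float], b: List[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity; for unit vectors this is just the dot product."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def relevance_from_distance(distance: float, space: str = "l2") -> float:
    """Convert a distance to a cosine-similarity score, assuming unit-length vectors."""
    if space == "cosine":
        return 1.0 - distance        # cosine distance = 1 - similarity
    if space == "l2":
        return 1.0 - distance / 2.0  # squared L2 = 2 - 2 * similarity
    raise ValueError(f"unsupported space: {space}")


a = normalize([1.0, 2.0, 3.0])
b = normalize([2.0, 0.0, 1.0])

d = squared_l2(a, b)
print(f"squared L2 distance: {d:.4f}")
print(f"relevance from distance: {relevance_from_distance(d, 'l2'):.4f}")
print(f"cosine similarity: {cosine_similarity(a, b):.4f}")
```

The last two printed values should agree, which is why the conversion has to match the metric the collection was created with.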

Let’s add the ability to filter our search results by metadata:

    def filtered_search(query, filter_source=None, n_results=5):
        """
        Search with optional filtering by source.

        Args:
            query (str): The search query
            filter_source (str): Optional source to filter by
            n_results (int): Number of results to return

        Returns:
            dict: Search results
        """
        # Only chunks whose "source" metadata equals filter_source are considered
        where_clause = {"source": filter_source} if filter_source else None

        results = collection.query(
            query_texts=[query],
            n_results=n_results,
            where=where_clause
        )

        return results

    # Each source is an article title recorded during chunking
    unique_sources = list(set(chunk_sources))
    print(f"Available sources for filtering: {len(unique_sources)}")
    print(unique_sources[:5])

    if unique_sources:
        filter_source = unique_sources[0]
        query = "main concepts and principles"

        print(f"\nFiltered search for '{query}' in source '{filter_source}':")
        results = filtered_search(query, filter_source=filter_source)

        for i, doc in enumerate(results['documents'][0]):
            print(f"\nResult {i+1}:")
            print(f"{doc[:200]}...")

In conclusion, we demonstrated how to build a semantic document search engine using Hugging Face embedding models and ChromaDB. By transforming text into vector representations, the system retrieves documents based on meaning rather than just keywords. The implementation processes Wikipedia articles, chunks them for granularity, embeds them using sentence transformers, and stores them in a vector database for efficient retrieval. The final product features interactive searching, metadata filtering, and relevance ranking.


The post A Coding Implementation to Build a Document Search Agent (DocSearchAgent) with Hugging Face, ChromaDB, and Langchain appeared first on MarkTechPost.