User analyses and benchmark tests reveal a split verdict between DeepSeek R1 and o3-mini, with developers and businesses prioritizing distinct strengths in AI model performance, pricing, and accessibility. User feedback pits DeepSeek R1’s cost efficiency and technical innovation against o3-mini’s reliability and ecosystem integration.

OpenAI o3-mini vs DeepSeek-R1: Which is better?

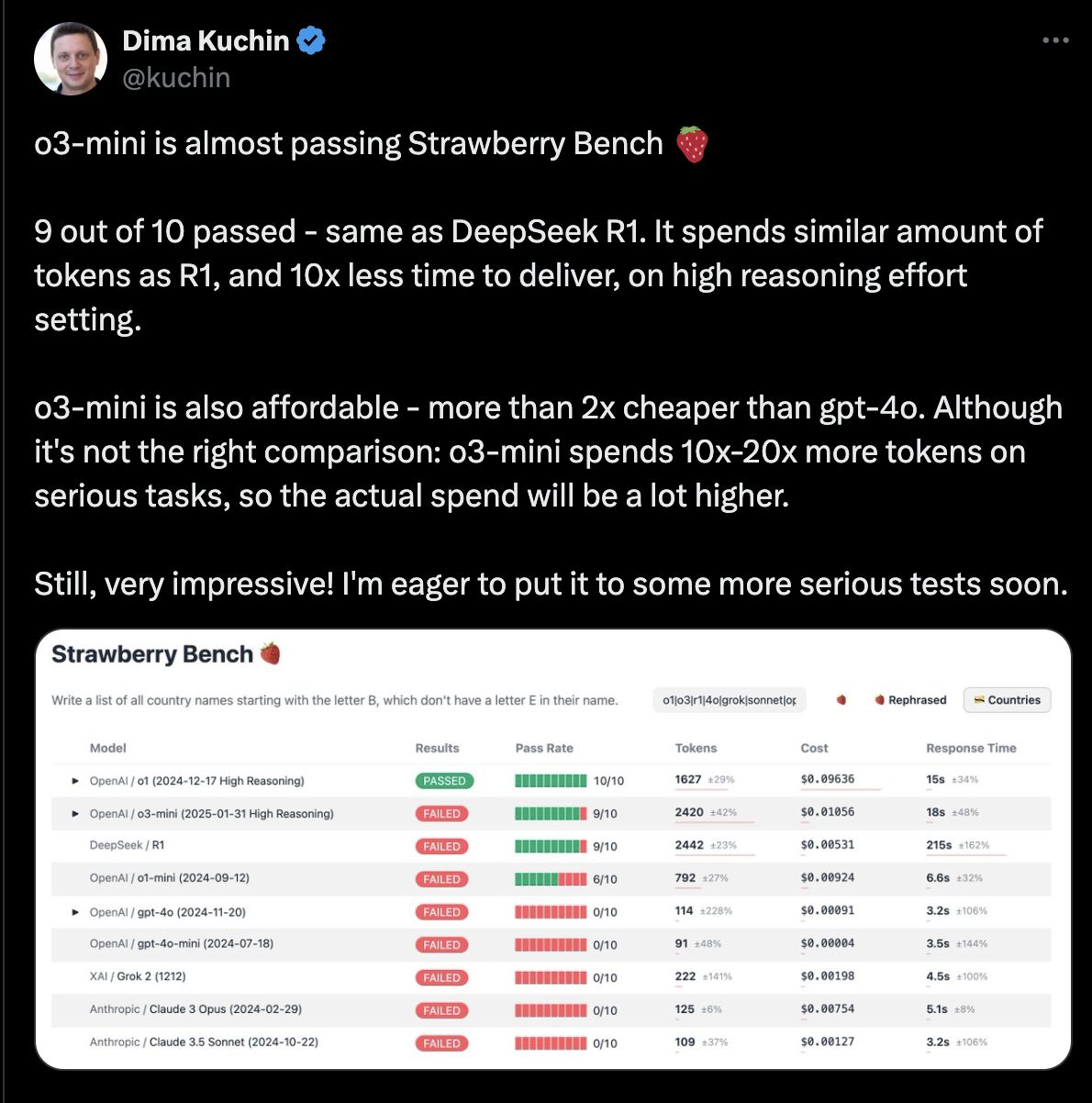

Performance benchmarks reveal divergent strengths

DeepSeek R1 scored 79.8% on the AIME math benchmark and 93% on MATH-500, outperforming competitors in complex reasoning tasks. Users praised its transparent “chain of thought” outputs, which researchers and academics find valuable for replicating results. However, testers noted inconsistencies in multi-turn conversations and occasional language mixing in responses.

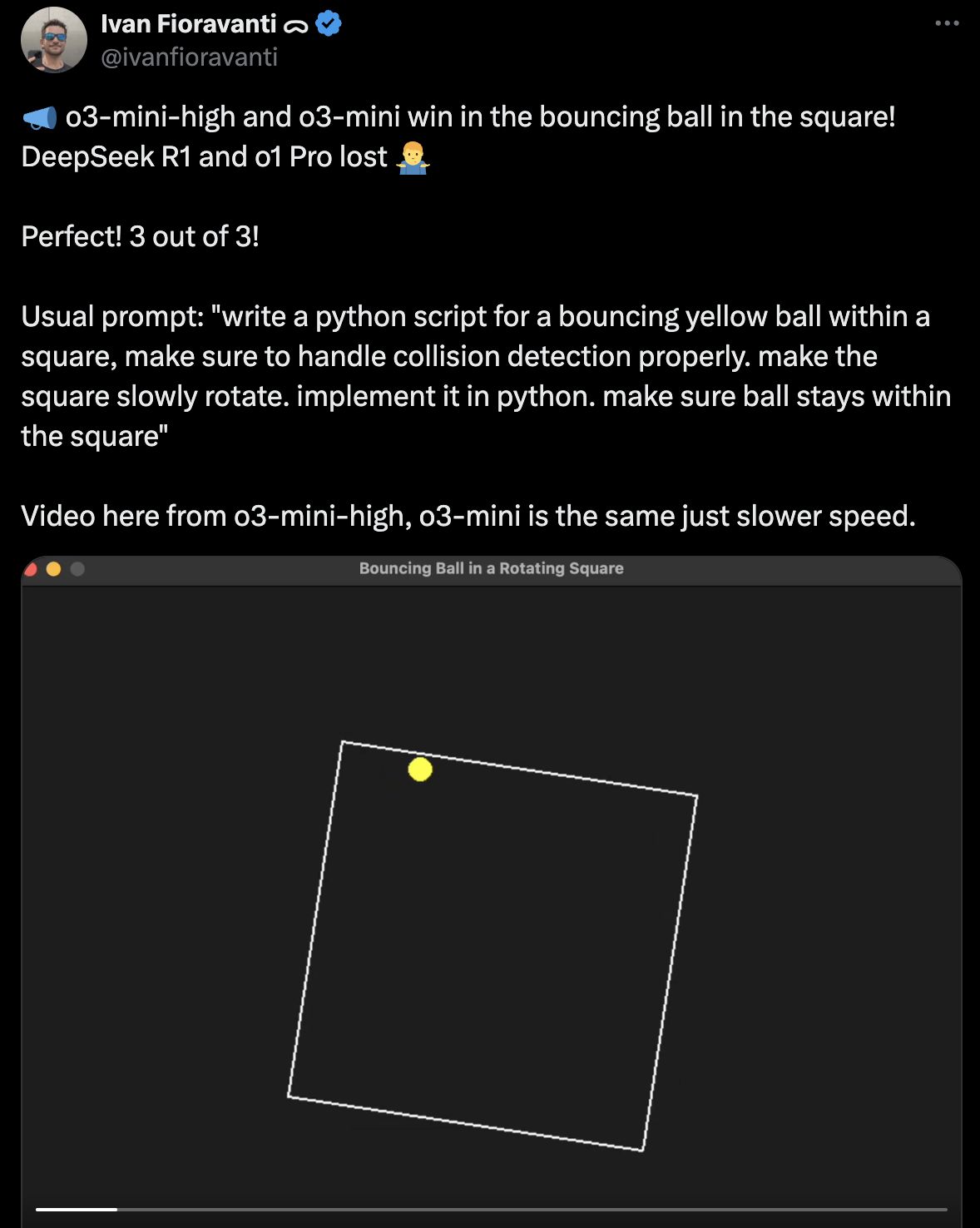

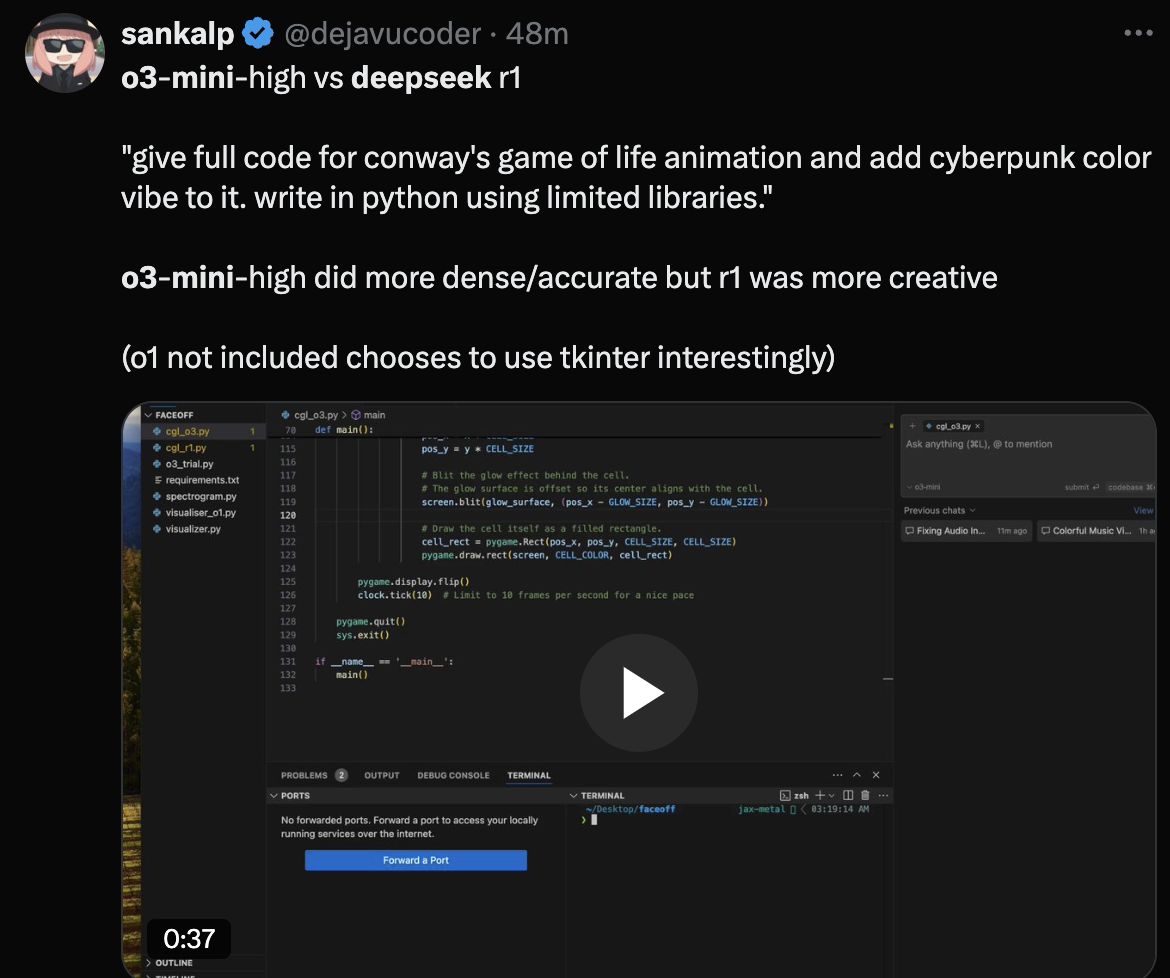

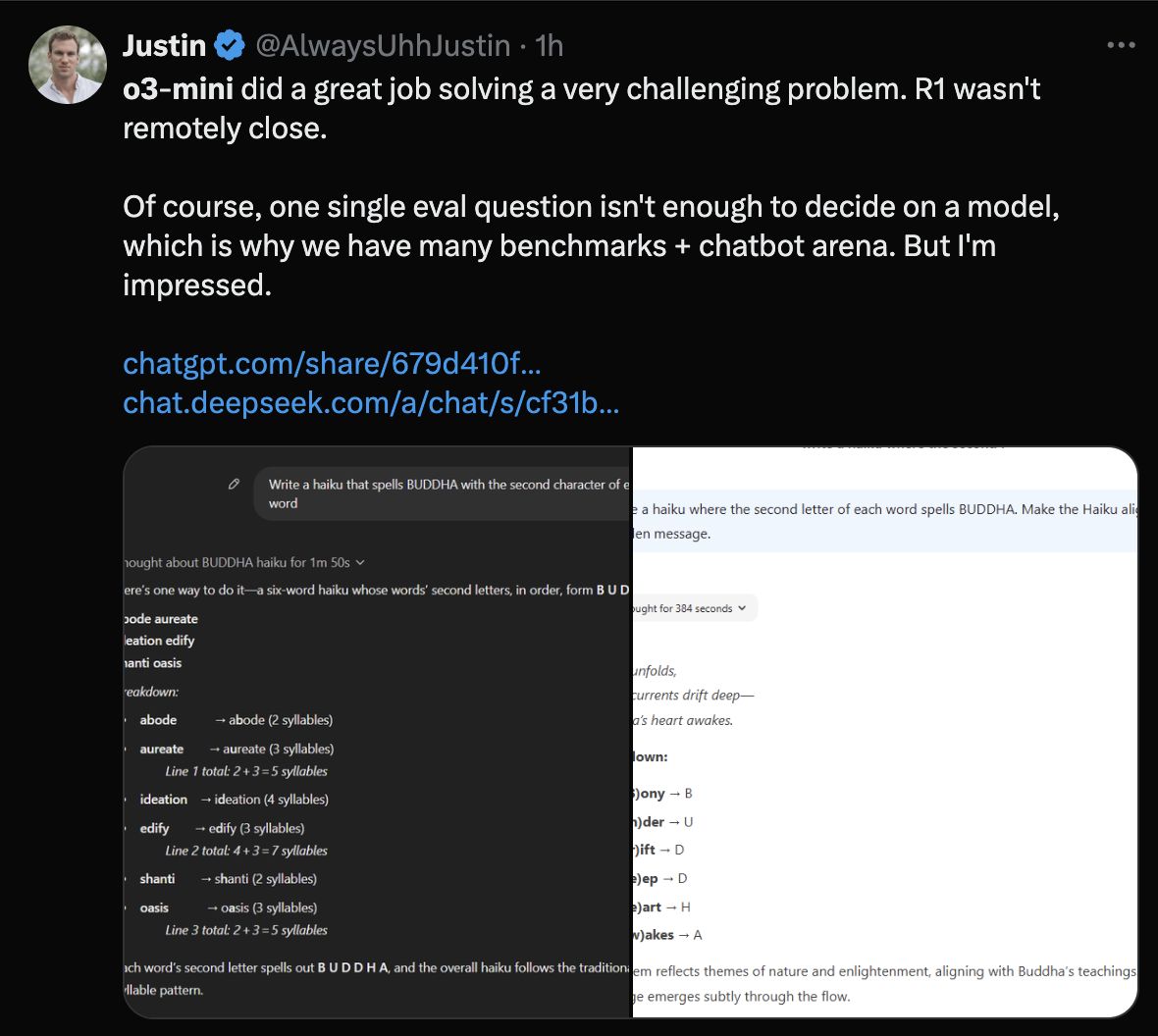

o3-mini demonstrated faster inference speeds and more stable performance in structured, multi-turn dialogues, according to real-world user reports. While it trails DeepSeek R1 in specialized math benchmarks, developers described it as “predictable and polished” for routine tasks like code generation and data analysis.

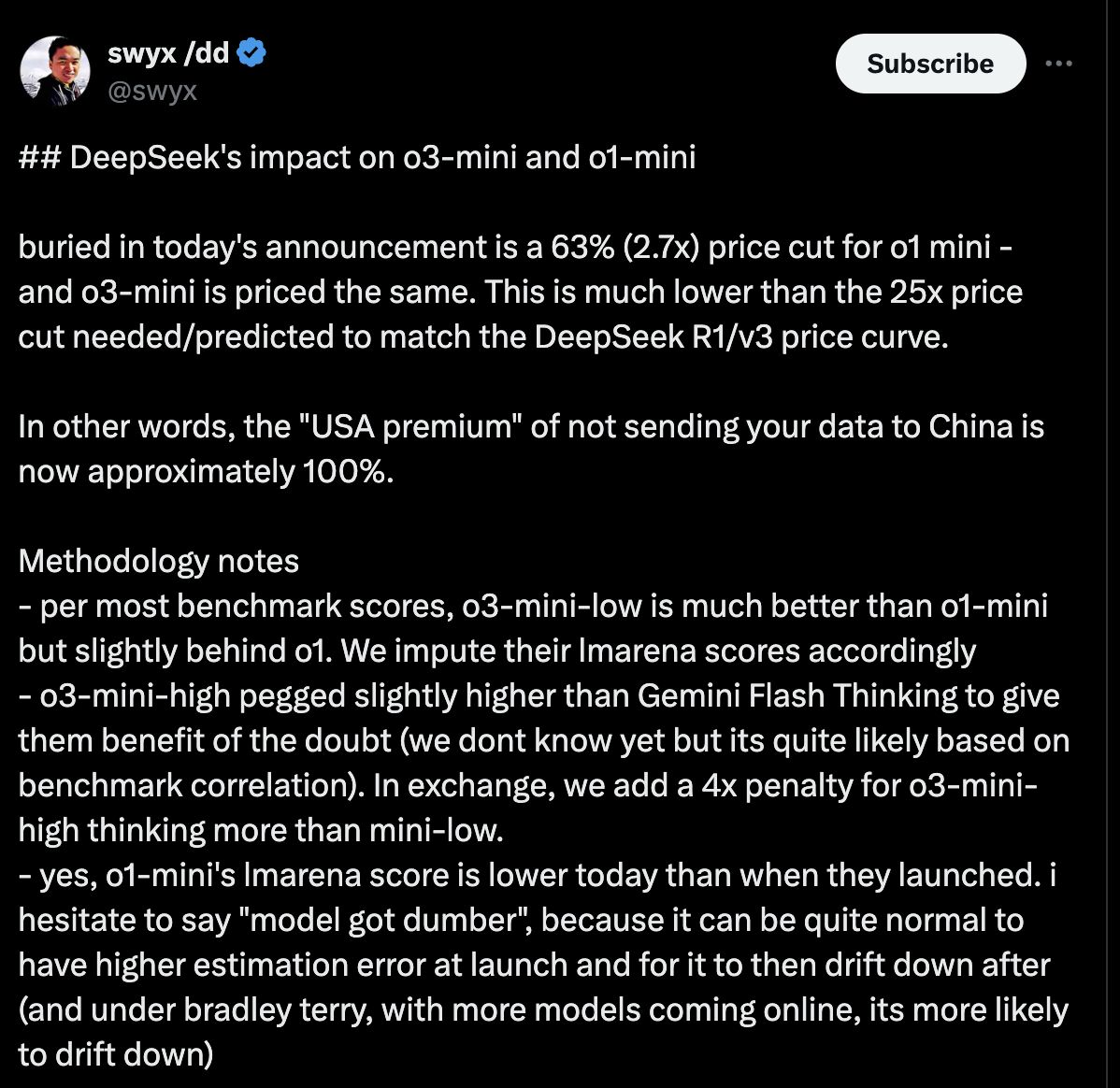

Cost comparison highlights market disruption

DeepSeek R1 emerges as the most affordable option in recent AI model comparisons, undercutting OpenAI’s o3-mini, o1-mini, and o1 models by significant margins. Pricing data reveals stark differences in token costs across competing services.

OpenAI model pricing tiers

o3-mini costs $1.10 per million input tokens and $4.40 per million output tokens, representing a 63% discount compared to o1-mini and a 93% reduction from the full o1 model’s pricing. A 50% cache discount further lowers o3-mini’s effective rates for eligible users.
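
As a rough check on those percentages, the sketch below backs out the list prices that a 63% and a 93% discount would imply, then halves o3-mini's rates for the cache discount. The helper name and constants are illustrative only, and the implied prices come from the rounded figures quoted above, so they may differ slightly from OpenAI's published rates. Applying the cache discount to both input and output rates is an assumption made here for simplicity.

```python
# Illustrative check of the discount figures quoted above (USD per million tokens).
# implied_list_price is a hypothetical helper, not part of any OpenAI tooling.

O3_MINI_INPUT = 1.10
O3_MINI_OUTPUT = 4.40


def implied_list_price(discounted_price: float, discount: float) -> float:
    """Return the original price implied by a discounted price and a fractional discount."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return discounted_price / (1.0 - discount)


# Input-token prices implied by the quoted 63% and 93% discounts.
o1_mini_input = implied_list_price(O3_MINI_INPUT, 0.63)
o1_input = implied_list_price(O3_MINI_INPUT, 0.93)

# Assumption: the 50% cache discount is applied to both rates.
cached_input = O3_MINI_INPUT * 0.5
cached_output = O3_MINI_OUTPUT * 0.5

print(f"implied o1-mini input price: ${o1_mini_input:.2f}")
print(f"implied o1 input price:      ${o1_input:.2f}")
print(f"o3-mini with cache discount: ${cached_input:.2f} / ${cached_output:.2f}")
```

Because the quoted discounts are rounded to whole percentages, the implied prices are approximations rather than exact list prices.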

DeepSeek’s aggressive pricing strategy

DeepSeek R1 charges $0.14 per million input tokens and $0.55 per million output tokens – approximately 87% cheaper than o3-mini’s base rates. This makes R1:

• roughly 95% cheaper than o1-mini’s equivalent pricing

• 99% cheaper than the full o1 model’s original costs

The pricing gap persists even when applying o3-mini’s cache discount: At $0.55/$2.20 per million tokens (input/output), o3-mini remains nearly 4x more expensive than R1’s undiscounted rates. Developers on X called it a “game-changer” for startups and academic projects requiring high-performance AI without licensing fees.
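
The gap described above can be reproduced with a few lines of arithmetic. The following is a minimal sketch that uses only the per-million-token prices quoted in this article; the function names and the example workload (10 million input tokens, 2 million output tokens) are hypothetical and chosen purely for illustration.

```python
# Illustrative comparison using the per-million-token prices quoted in the article (USD).
# All names are hypothetical; the workload size is an arbitrary example.

R1 = {"input": 0.14, "output": 0.55}
O3_MINI = {"input": 1.10, "output": 4.40}
O3_MINI_CACHED = {"input": 0.55, "output": 2.20}  # article's figures with the 50% cache discount


def percent_cheaper(cheap: float, expensive: float) -> float:
    """Percentage by which `cheap` undercuts `expensive`."""
    return (1.0 - cheap / expensive) * 100.0


def workload_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Total cost in USD for a workload, given per-million-token prices."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


input_saving = percent_cheaper(R1["input"], O3_MINI["input"])
output_saving = percent_cheaper(R1["output"], O3_MINI["output"])

# How many times more expensive discounted o3-mini is than undiscounted R1.
cached_input_ratio = O3_MINI_CACHED["input"] / R1["input"]
cached_output_ratio = O3_MINI_CACHED["output"] / R1["output"]

# Hypothetical workload: 10M input tokens, 2M output tokens.
r1_cost = workload_cost(R1, 10_000_000, 2_000_000)
o3_cost = workload_cost(O3_MINI, 10_000_000, 2_000_000)

print(f"R1 is {input_saving:.1f}% (input) and {output_saving:.1f}% (output) cheaper than o3-mini")
print(f"Cached o3-mini costs {cached_input_ratio:.2f}x (input) and {cached_output_ratio:.2f}x (output) R1's rates")
print(f"Example workload: R1 ${r1_cost:.2f} vs o3-mini ${o3_cost:.2f}")
```

Actual bills depend on the input-to-output token mix and on how much of the input qualifies for caching, so the example workload should be replaced with real usage numbers before drawing conclusions.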

o3-mini’s pricing aligns with OpenAI’s commercial models but includes access to ChatGPT’s enterprise tools, including SOC 2 compliance and granular usage controls. Enterprise users justify the higher cost by citing reduced deployment complexity and built-in security features.

Ease of use: Accessibility vs customization

o3-mini dominates in usability with its ChatGPT-style interface, free tier for basic users, and API requiring minimal coding expertise. Non-technical testers completed integration in under 30 minutes, praising its “intuitive design” for rapid prototyping.

DeepSeek R1 demands technical proficiency for deployment, requiring users to manage infrastructure and fine-tune models via code. While developers appreciate its flexibility, small teams report spending “hours troubleshooting configurations” to optimize outputs.

Community reactions show split preferences

X users highlight DeepSeek R1’s affordability and transparency. Critics cite “awkward formatting” and weaker performance in creative writing tasks compared to o3-mini.

o3-mini earns praise for reliability, but skeptics argue its cost prohibits scaling, while some developers lament limited control over model behavior compared to open-source alternatives.

Final verdict: Specialists vs generalists

Technical teams favor DeepSeek R1 for its math prowess, open-source code, and radical cost savings, despite steeper learning curves. Businesses and casual users prefer o3-mini’s plug-and-play functionality and seamless integration with existing OpenAI workflows.

Community discussions underscore a growing divide: DeepSeek R1 attracts researchers and developers prioritizing raw performance, while o3-mini retains enterprises and individuals valuing stability and ease of adoption.